Rules to Better Bots - 7 Rules

Bots promote an effective and productive workplace. Many companies already use them more than regular 'search' functionality.

Want to have a business grade bot powered by serious AI? Check SSW's Bots consulting page.

When users jump onto a website, they may want answers to some questions but aren't sure where to look. Bots are an awesome way to give users quick answers to simple or repetitive questions. There are many different frameworks you can use to build your bots, such as Microsoft Bot Framework and Google Dialogflow, but which is the best one?

All of the bot frameworks have advantages, but generally the main point of differentiation is the integration with different suites of products. For example, Microsoft Bot Framework has smooth integration with Microsoft Teams. On the other hand, Google Dialogflow works great with Google Assistant and Slack.

If you are focused mostly on Microsoft products then it is a safe bet that Microsoft Bot Framework is a good choice for your organisation.

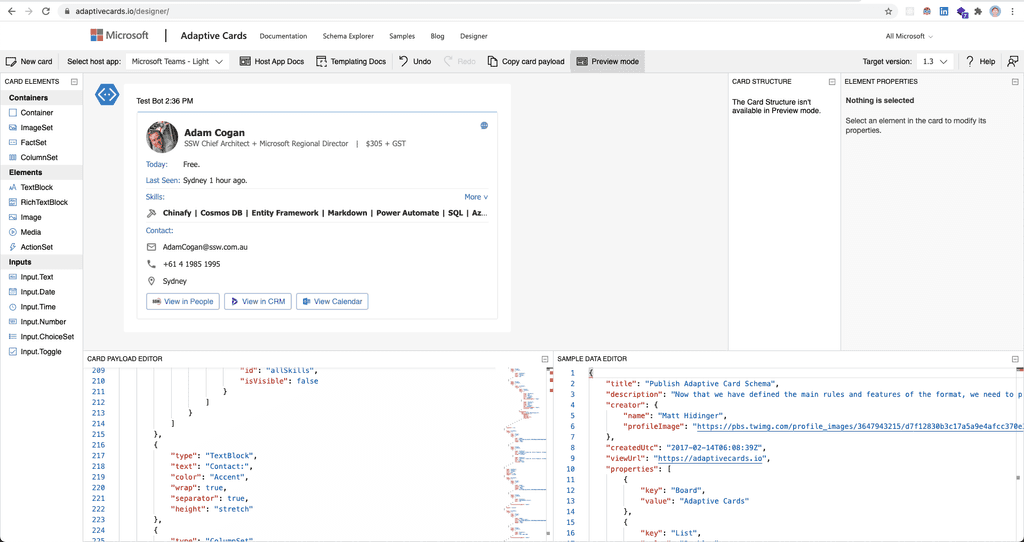

Microsoft Bot Framework also integrates with LUIS and provides access to the Adaptive Cards Designer so there are a lot of great reasons to use it for your next implementation.

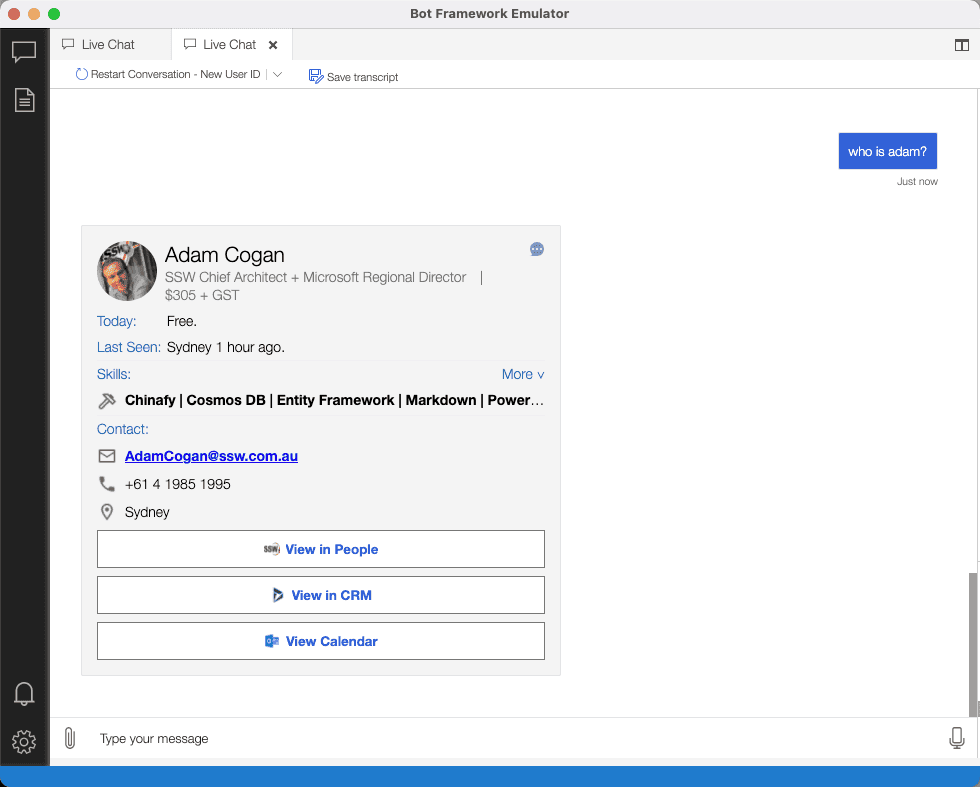

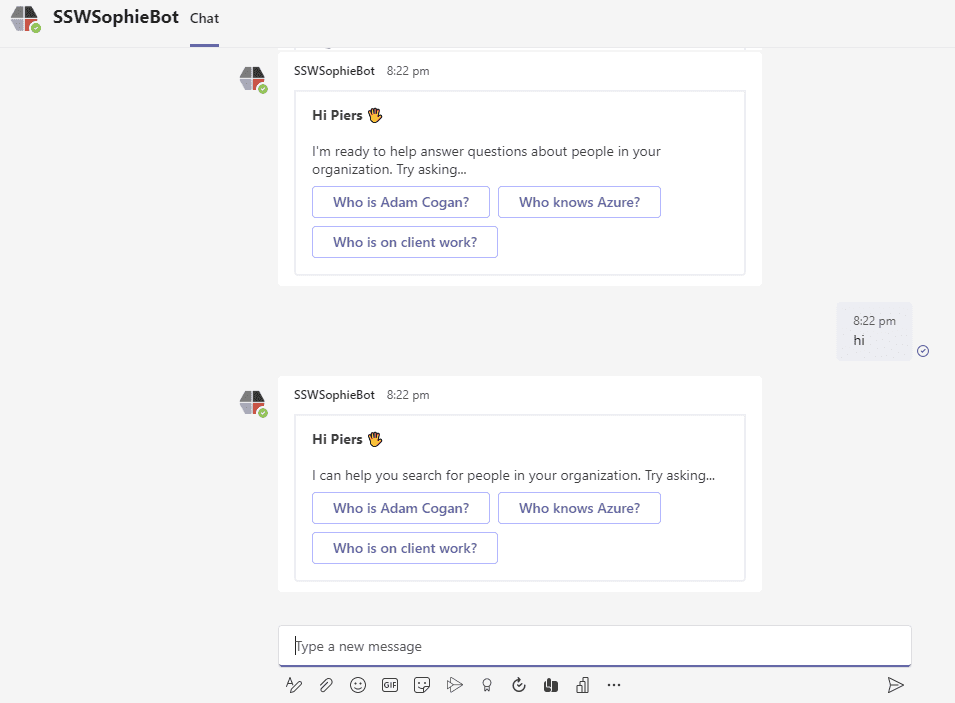

SSW SophieBot is a product currently using Microsoft Bot Framework to make searching for employee skills and availability super easy!

A chat bot needs some way to understand natural language text so it can simulate a person and provide a conversational experience for users. If you are using Microsoft Bot Framework, you can use LUIS. LUIS is a natural language processing service and it provides some awesome benefits...

- Built-in support from Microsoft Bot Framework

- Well-trained prebuilt entities (e.g. Person names, date and time, geographic locations)

- A user friendly GUI portal where you can create, test and publish LUIS apps with just a couple of clicks

Intents and User Utterances

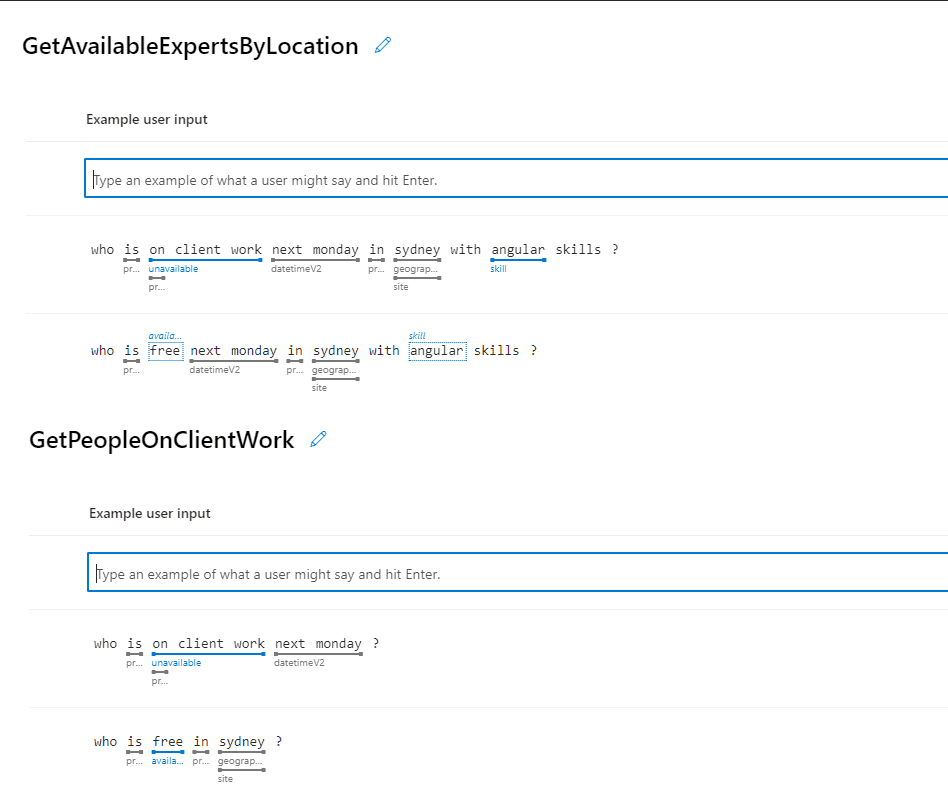

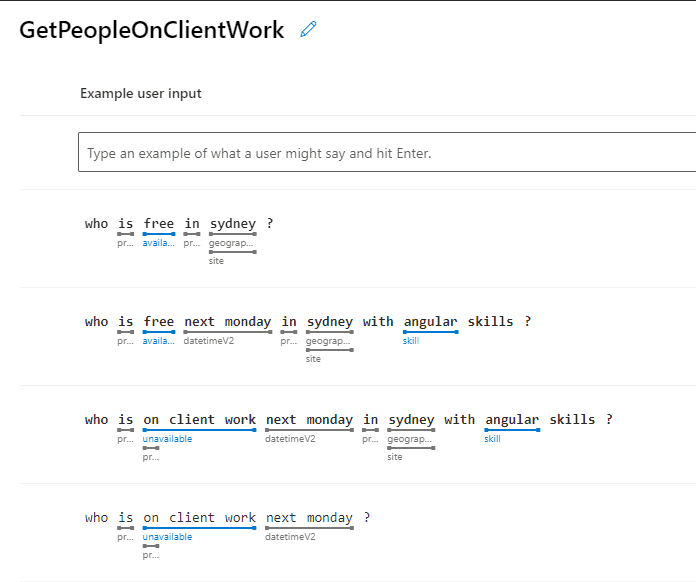

To build a LUIS application, you need to classify different utterances that a user might ask into specific "intents". For example, a user might want to ask "Who is working on SophieBot" in many different ways (e.g. "Show me people working on SophieBot"), so you should make this an intent so that the different ways of wording it are treated the same way.

Entities

Sometimes you may need to get different parts of an intent, so that you can retrieve extra data from an API endpoint or perform some other kind of custom logic. In that case, LUIS needs some way to figure out what the different subjects are in an intent. Entities enable you to do this. For example, if the user asks "Who is working on SophieBot", you will need to call an API to get the people working on the "SophieBot" project, so you need to mark "SophieBot" as an entity.

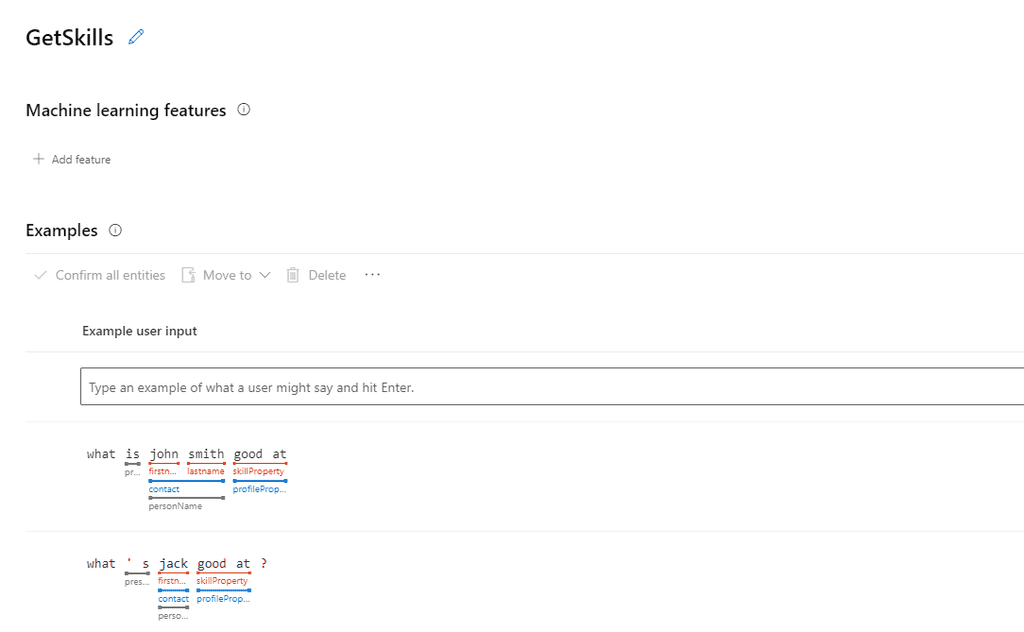

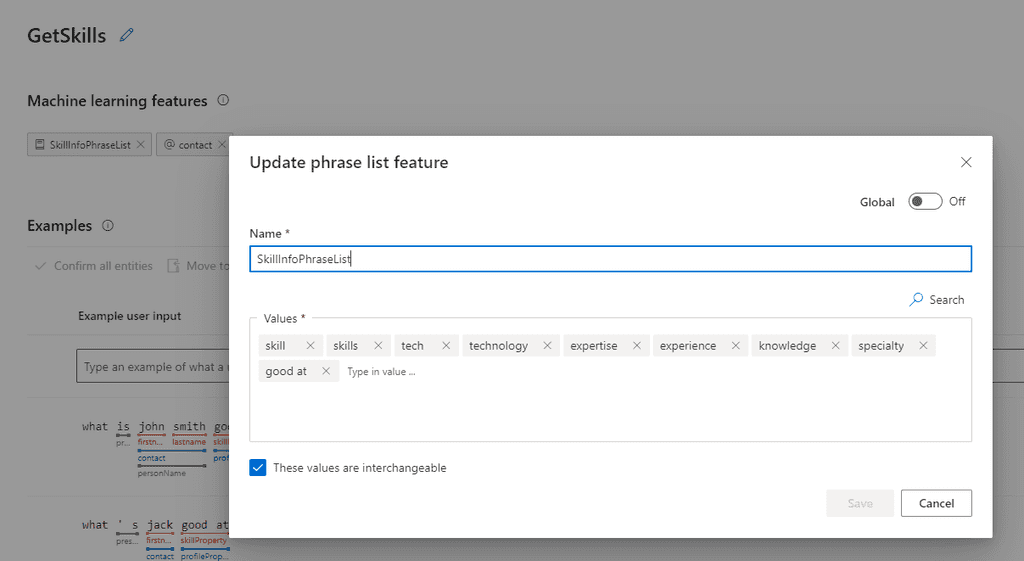
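The relationship between intents and entities can be sketched in code. The example below is a minimal illustration, not SophieBot's implementation: the `prediction` dictionary is loosely modelled on the shape of a LUIS prediction result, and the intent name `GetPeopleOnProject`, the entity name `project`, and all function names are hypothetical.

```python
from typing import Callable

# Hypothetical prediction result, loosely modelled on a LUIS prediction
# response. The intent and entity names are illustrative assumptions.
prediction = {
    "topIntent": "GetPeopleOnProject",
    "intents": {"GetPeopleOnProject": {"score": 0.97}, "None": {"score": 0.02}},
    "entities": {"project": ["SophieBot"]},
}


def get_people_on_project(entities: dict) -> str:
    # A real bot would call a project API here; this sketch only
    # describes the lookup it would perform.
    projects = entities.get("project", [])
    if not projects:
        return "Which project do you mean?"
    return f"Looking up people working on {projects[0]}"


def fallback(entities: dict) -> str:
    return "Sorry, I didn't understand that."


# One handler per intent: every wording of the same question lands here.
HANDLERS: dict[str, Callable[[dict], str]] = {
    "GetPeopleOnProject": get_people_on_project,
}


def route(prediction: dict) -> str:
    handler = HANDLERS.get(prediction["topIntent"], fallback)
    return handler(prediction.get("entities", {}))


print(route(prediction))
```

The intent decides which handler runs, and the entity supplies the argument that the handler needs for its API call.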

Features

As your LUIS model grows, it's possible that certain intents have similar user utterances. For example, "What's Adam's skills" has a very similar format to "What's Adam's mobile", so LUIS might think "What's Adam's mobile" is a "What's Adam's skills" intent.

So you need a way to define what phrases have the same meaning as "mobile" and what phrases have the same meaning as "skills". Phrase list features let you do this. For example, "mobile" may have a phrase list feature containing "mobile", "phone number", "telephone number" etc.

Best practices

To make LUIS recognition more precise, some of the best practices are:

-

Do define distinct intents

-

Do assign features for intents

-

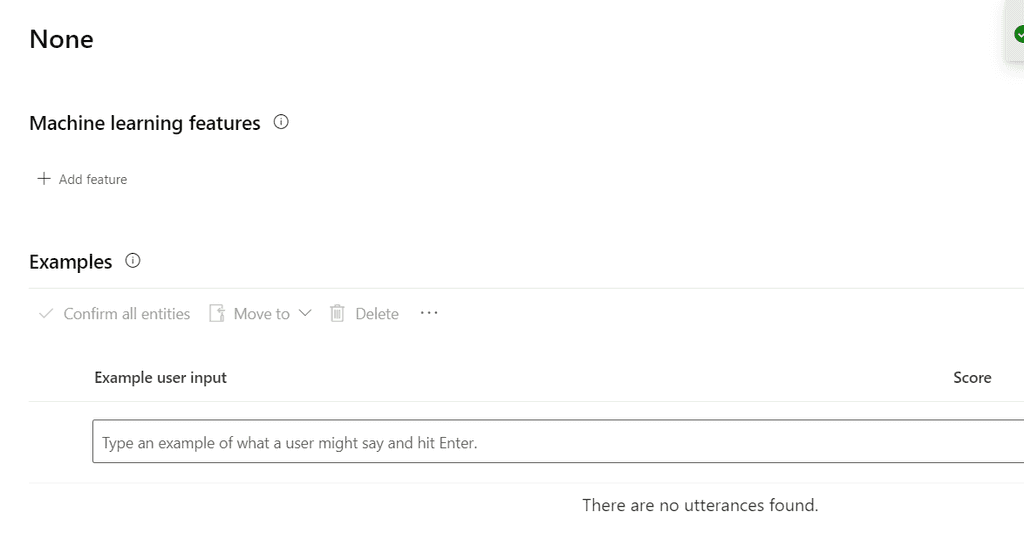

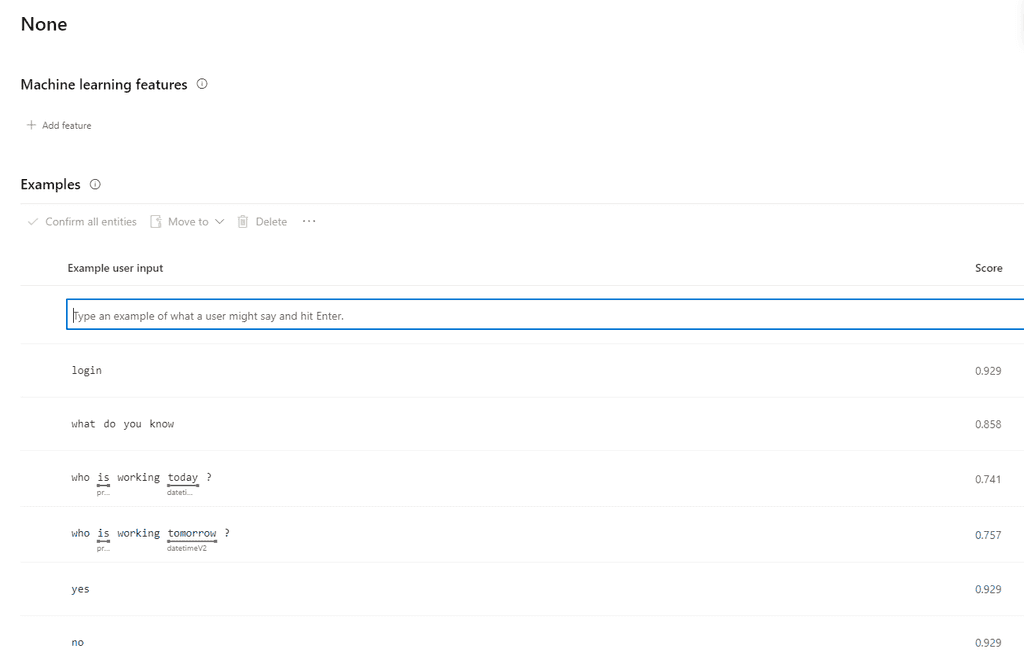

Do add examples to None intent (the fallback intent if LUIS doesn't recognize the user input as any intent)
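On the bot side, a common companion to the None intent is to treat low-confidence predictions as None as well. The sketch below assumes the prediction exposes a score per intent; the `resolve_intent` helper, the intent names, and the 0.5 threshold are all illustrative assumptions, not LUIS defaults.

```python
def resolve_intent(intents: dict, threshold: float = 0.5) -> str:
    """Return the highest-scoring intent, or "None" when confidence is low.

    `intents` maps an intent name to a dict holding its "score".
    The threshold is an assumption and should be tuned per application.
    """
    if not intents:
        return "None"
    top_name, top = max(intents.items(), key=lambda item: item[1]["score"])
    return top_name if top["score"] >= threshold else "None"


print(resolve_intent({"GetSkills": {"score": 0.91}, "GetMobile": {"score": 0.40}}))
print(resolve_intent({"GetSkills": {"score": 0.31}, "None": {"score": 0.28}}))
```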

If you are using Microsoft Bot Framework and Bot Framework Emulator, you can't preview what the card UI looks like in other platforms (e.g. Teams) until you deploy to production. Adaptive Cards Designer helps you solve this problem by providing some awesome features...

- Online editing

- Multi-platform preview

There are two ways to use Adaptive Cards Designer:

- Using online version

- Using Adaptive Cards Designer SDK to embed into your application

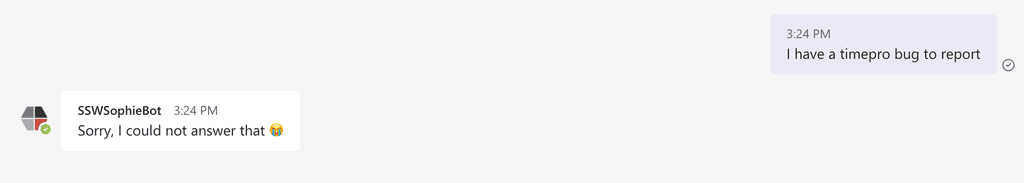

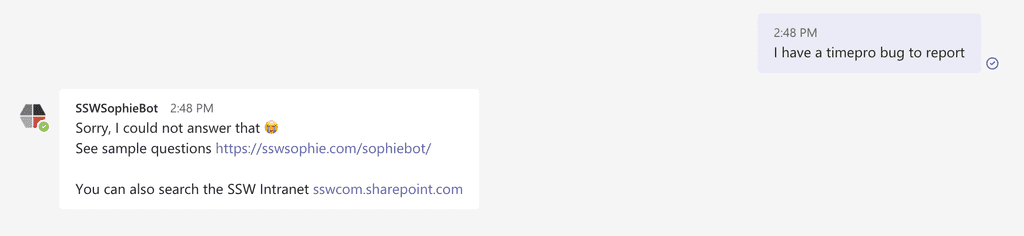
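To try the designer, you need a card payload to paste into it. The sketch below builds a small Adaptive Card as a Python dictionary and prints it as JSON; the `build_profile_card` helper and the sample name and skills are hypothetical, and the card uses only the basic `TextBlock` element.

```python
import json


def build_profile_card(name: str, skills: list[str]) -> dict:
    """Build a minimal Adaptive Card payload (illustrative content only)."""
    return {
        "type": "AdaptiveCard",
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "version": "1.3",
        "body": [
            {"type": "TextBlock", "text": name, "weight": "Bolder", "size": "Medium"},
            {"type": "TextBlock", "text": "Skills: " + ", ".join(skills), "wrap": True},
        ],
    }


# The printed JSON can be pasted into the designer's card payload editor.
print(json.dumps(build_profile_card("Adam", ["Azure", "Bots"]), indent=2))
```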

Sometimes when a user asks a question that the bot doesn't know the answer to, they get an unhelpful response and no further options. Instead, it should give them some further options for how to find the information...
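A fallback reply of this kind might be assembled as follows. This is only a sketch: the three options, the `build_fallback_reply` helper, and the `example.com` search URL are hypothetical placeholders for whatever your bot can actually offer.

```python
from urllib.parse import quote_plus


def build_fallback_reply(question: str) -> str:
    """Return a reply that admits the gap and offers next steps."""
    # Hypothetical search URL; replace with your own site search.
    search_url = f"https://example.com/search?q={quote_plus(question)}"
    options = [
        "Try rephrasing your question",
        f"Search the site: {search_url}",
        "Type 'help' to see what I can answer",
    ]
    lines = ["Sorry, I don't know the answer to that yet. You could:"]
    lines += [f"{n}. {text}" for n, text in enumerate(options, start=1)]
    return "\n".join(lines)


print(build_fallback_reply("office wifi password"))
```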

With the advent of Microsoft Flow and Logic Apps, automated emails are becoming more common. In fact, any reminder or notification email you find yourself sending regularly should probably be automated.

However, the end user should be able to tell that the email was sent by a bot and not a real person, both for transparency and to potentially prompt them to automate some of their own workflow.

To: SSWAll

Subject: It's SSW.Shorts Day!

Hi All

The maximum temperature for today is 32.

Feel free to wear shorts if you like 🌞 (hope you haven't skipped leg day 😜)

If you decide against it, then don't forget the SSW dress code applies as usual (e.g. dress up on Monday and Tuesday - no jeans).

Today's forecast: Patchy fog early this morning, mostly in the south, then sunny. Light winds becoming northwesterly 15 to 25 km/h in the afternoon.

Uly

-- Powered by SSW.Shorts

<This email was sent as per the rule: https://ssw.com.au/rules/do-you-have-a-dress-code>

Figure: Good example – You can clearly see this was sent by a bot
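One way to make sure every automated email carries this kind of marker is to add the footer in code rather than relying on each template. The sketch below uses Python's standard `email.message.EmailMessage`; the `build_bot_email` helper and its parameters are hypothetical, and sending the message is left out.

```python
from email.message import EmailMessage
from typing import Optional


def build_bot_email(to: str, subject: str, body: str, sender_name: str,
                    bot_name: str, rule_url: Optional[str] = None) -> EmailMessage:
    """Build an email whose footer makes it clear that a bot sent it."""
    parts = [body.rstrip(), "", sender_name, f"-- Powered by {bot_name}"]
    if rule_url:
        # Optional pointer to the rule that the automation follows.
        parts += ["", f"<This email was sent as per the rule: {rule_url}>"]
    message = EmailMessage()
    message["To"] = to
    message["Subject"] = subject
    message.set_content("\n".join(parts))
    return message


email = build_bot_email(
    to="SSWAll",
    subject="It's SSW.Shorts Day!",
    body="Hi All\n\nThe maximum temperature for today is 32.",
    sender_name="Uly",
    bot_name="SSW.Shorts",
    rule_url="https://ssw.com.au/rules/do-you-have-a-dress-code",
)
print(email.get_content())
```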

When designing your Bot, you will very likely leverage some Bot Frameworks or AI Services to take care of the Natural Language Processing (NLP) so that you can focus on implementing your business logic.

To host your business logic, it is common to use serverless platforms such as Azure Functions, Google Firebase Functions or Amazon Web Services (AWS) Lambda functions. Serverless applications come with amazing scaling abilities and simplified programming models, but they also suffer from at least one well-known side effect, commonly referred to as Cold Starts.

Here are some recommended solutions to eliminate Cold Starts:

Microsoft Azure Functions

- Add warm-up request

Use a timer trigger function to keep the Azure Functions application warm. If you know that the Function App is likely to be cold at a certain time of day, wake it up just before you expect users to send requests.

- Move to App Service Plan

Azure Functions can be hosted using dedicated App Service Plans instead of the serverless Consumption plan. You then no longer need to worry about cold starts, since the compute resource is always available and ready. The only caveat is that the Azure Functions won't automatically scale out as they do with the Consumption plan. So think ahead, measure your compute requirements, and make the right decision.

- Upgrade to Premium Plan

If you want a dedicated instance that is always available and ready, and you also require the ability to automatically scale out under high load, try the Azure Functions Premium Plan (preview). The premium plan always has at least 1 core ready to process requests and your application will not suffer from cold starts. The premium plan does come at a price, so plan ahead.
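For the warm-up option, the timer trigger needs a schedule that fires shortly before the expected traffic. Azure Functions timer triggers take a six-field NCRONTAB expression (second, minute, hour, day, month, day of week). The helper below is a hypothetical sketch that computes such an expression; the function name and the 5-minute lead time are assumptions.

```python
def warmup_schedule(hour: int, minute: int, lead_minutes: int = 5) -> str:
    """Build a daily NCRONTAB expression firing lead_minutes before a time.

    Field order: second minute hour day month day-of-week.
    Timer schedules are evaluated in UTC unless the app is configured
    otherwise, so pass the expected busy time in UTC.
    """
    if not (0 <= hour < 24 and 0 <= minute < 60):
        raise ValueError("hour must be 0-23 and minute 0-59")
    # Work in minutes since midnight and wrap around past midnight.
    total = (hour * 60 + minute - lead_minutes) % (24 * 60)
    return f"0 {total % 60} {total // 60} * * *"


# Users arrive at 09:00 UTC, so wake the app at 08:55 UTC.
print(warmup_schedule(9, 0))
```

The resulting string would go in the `schedule` setting of the timer trigger. A fixed interval such as `0 */5 * * * *` (every five minutes) is the simpler alternative when traffic has no predictable start time.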

Firebase Functions on Google Cloud Platform

- In Node.js code, export all the functions you want to deploy to Cloud Functions, and import / require each dependency inside the function that uses it. That way, each function call loads only the dependencies it needs instead of loading every dependency in the index.ts file.

- Warm-up request - Create a separate function that runs on a timer. Choose an interval short enough that your app does not go cold between runs.

- If cold starts are unbearable, move to other infrastructure such as App Engine.
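The deferred-loading idea from the first point is sketched here in Python rather than Node.js, where an `import` inside the function body plays the same role as a `require` inside a handler. The two handlers are hypothetical, and the standard-library modules stand in for heavier third-party dependencies.

```python
def handle_csv_export(rows: list[list[str]]) -> str:
    # Loaded only when this handler is invoked, not when the module loads.
    import csv
    import io

    buffer = io.StringIO()
    csv.writer(buffer).writerows(rows)
    return buffer.getvalue()


def handle_compress(payload: bytes) -> bytes:
    # A different handler pays only for its own dependency.
    import zlib

    return zlib.compress(payload)


print(handle_csv_export([["name", "skill"], ["Adam", "Bots"]]))
print(len(handle_compress(b"a" * 1000)))
```

After the first call the module is cached, so the import cost is paid once per instance and only by the handlers that need it.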

Amazon Web Services (AWS) Lambda

- Instead of using statically typed programming languages like Java and C#, you may prefer dynamically typed options like Python or JavaScript (Node.js).

- Avoid deploying Lambdas in Amazon Virtual Private Cloud (VPC).

- Avoid making HTTPS or TCP client calls inside your Lambda. Handshakes and other security-related calls are CPU-bound and will lengthen your function's cold starts.

- Add warm-up request

  - Send dummy requests to your functions with some frequency.

  - Make necessary changes on your Lambda to distinguish warm-up calls from customer calls.

  - Be mindful about the potential drawbacks of the warm-up requests

    - Warm-up calls can keep all your containers busy, so a real customer request may not find a place to run

    - A Lambda function in one container can catch all the warm-up calls at once, which lets the other containers go down after a while
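Distinguishing warm-up calls from customer calls can be as simple as checking for a marker in the event. The sketch below uses the standard Python Lambda handler signature; the `warmup` key is a hypothetical convention that the scheduled dummy request would have to send, and the greeting stands in for real business logic.

```python
WARMUP_KEY = "warmup"  # hypothetical marker set by the scheduled dummy request


def handler(event, context):
    # Return early for warm-up calls so they stay cheap and never
    # touch business logic or downstream services.
    if isinstance(event, dict) and event.get(WARMUP_KEY):
        return {"statusCode": 200, "body": "warm"}

    # Placeholder business logic for a real customer request.
    name = event.get("name", "there") if isinstance(event, dict) else "there"
    return {"statusCode": 200, "body": f"Hello, {name}!"}


print(handler({"warmup": True}, None))
print(handler({"name": "Adam"}, None))
```

Keeping the warm-up branch short also limits the first drawback listed above, since a container is occupied only briefly by each dummy request.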