Rules to Better Continuous Deployment - 15 Rules

If you still need help, visit Application Lifecycle Management and book in a consultant.

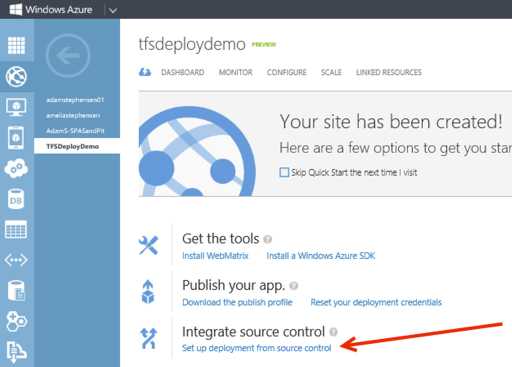

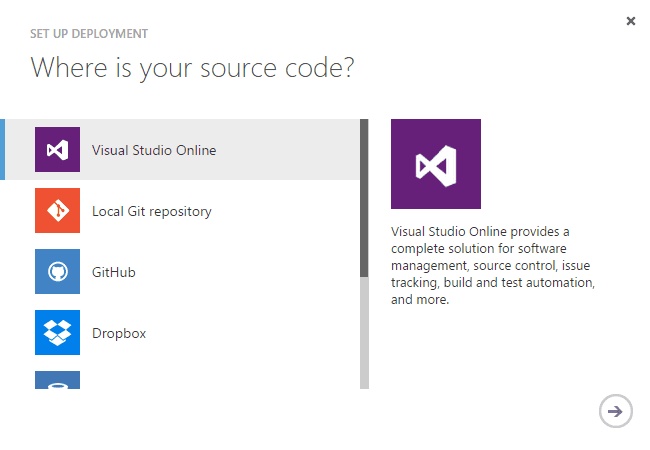

TFS and Windows Azure work wonderfully together. It only takes a minute to configure continuous deployment from Visual Studio Online (visualstudio.com) to a Windows Azure website or Cloud Service.

This is by far the simplest way to achieve continuous deployment of your websites to Azure. But if your application is more complicated, or you need to run UI tests as part of your deployment, you should use Octopus Deploy instead, as described in the rule "Do you use the best deployment tool?".

Figure: Setting up deployment from source control is simple from within the Azure portal

Figure: Deployment is available from a number of different source control repositories

Suggestion to Microsoft: We hope this functionality comes to on-premises TFS and IIS configurations in the next version.

Often, deployment is either done manually or as part of the build process. But deployment is a completely different step in your lifecycle. It's important that deployment is automated, but done separately from the build process.

There are two main reasons you should separate your deployment from your build process:

- You're not dependent on your servers for your build to succeed. Similarly, if you need to change deployment locations, or add or remove servers, you don't have to edit your build definition and risk breaking your build.

- You want to make sure you're deploying the *same* (tested) build of your software to each environment. If your deployment step is part of your build step, you may be rebuilding each time you deploy to a new environment.
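The second reason can be illustrated with a minimal sketch. It is not tied to TFS or any deployment tool; the `BuildArtifact`, `Environment` and `promote` names are hypothetical and exist only to show the idea that a package is built once and the identical bytes are then promoted to each environment.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class BuildArtifact:
    """An immutable package produced once by the build (hypothetical model)."""
    version: str
    content: bytes

    @property
    def checksum(self) -> str:
        return hashlib.sha256(self.content).hexdigest()


@dataclass
class Environment:
    """A deployment target that records what was deployed to it."""
    name: str
    deployed: dict = field(default_factory=dict)  # version -> checksum

    def deploy(self, artifact: BuildArtifact) -> None:
        # Deployment only copies the existing package; it never rebuilds.
        self.deployed[artifact.version] = artifact.checksum


def promote(artifact, environments):
    """Deploy the same artifact to every environment and return the checksums."""
    for env in environments:
        env.deploy(artifact)
    return {env.name: env.deployed[artifact.version] for env in environments}


artifact = BuildArtifact(version="1.0.42", content=b"compiled website package")
staging = Environment("staging")
production = Environment("production")
checksums = promote(artifact, [staging, production])
```

Because deployment never triggers a rebuild, the checksum recorded for staging and production is the same, which is what "deploying the same (tested) build" means in practice.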

The best tool for deployments is Octopus Deploy.

Octopus Deploy allows you to package your projects in NuGet packages, publish them to the Octopus server, and deploy the package to your configured environments. Advanced users can also perform other tasks as part of a deployment, such as running integration and smoke tests, or notifying third-party services of a successful deployment.

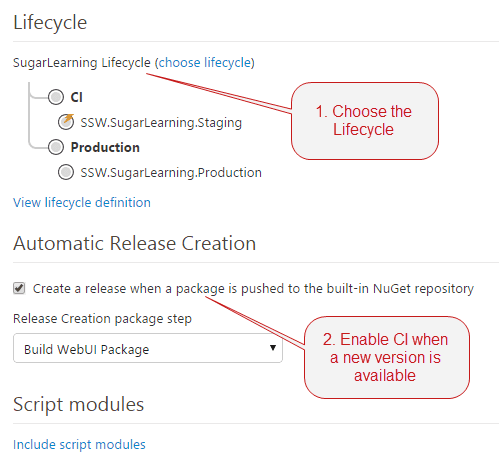

Version 2.6 of Octopus Deploy introduced the ability to create a new release and trigger a deployment when a new package is pushed to the Octopus server. Combined with OctoPack, this makes continuous integration very easy from Team Foundation Server.

What if you need to sync files manually?

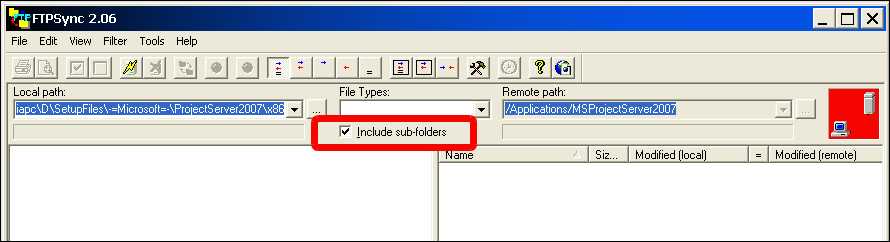

Then you should use an FTP client that lets you upload only the files you have changed. FTP Sync and Beyond Compare are recommended because they compare all the files on the web server to a directory on a local machine (including date updated and file size) and report which file is newer and which files will be overwritten by uploading or downloading. You should only make changes on the local machine, so that files are always uploaded from the local machine to the web server.

This process allows you to keep a local copy of your live website on your machine - a great backup as a side effect.

Whenever you make changes to the website, upload them as soon as they are approved. Tick the box that says "sync sub-folders", but when you click sync, check carefully for any files that are marked for a reverse sync and reverse the direction on those files.

For most general editing tasks, changes should be uploaded as soon as they are done. Don't leave it until the end of the day, because you won't be able to remember which pages you've changed. When you upload a file, sync EVERY file in that directory: it's highly likely that un-synced files were changed by someone who forgot to upload them. Also make sure that folders deleted on the local machine are deleted on the remote server.

If you are working on some files that you do not want to sync then put a _DoNotSyncFilesInThisFolder_XX.txt file in the folder. (Replace XX with your initials.) So if you see files that are to be synced (and you don't see this file) then find out who did it and tell them to sync. The reason you have this TXT file is so that people don't keep telling the web

NOTE: Immediately before deployment of an ASP.NET application with FTP Sync, you should ensure that the application compiles - otherwise it will not work correctly on the destination server (even though it still works on the development server).
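The comparison step and the do-not-sync marker described above can be sketched as follows. This is not how FTP Sync or Beyond Compare work internally; it is a minimal illustration under assumptions: both sides are represented as plain dictionaries of relative path to a hypothetical `FileInfo` (size and modified time), and the marker file only protects the folder that contains it.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath

MARKER_PREFIX = "_DoNotSyncFilesInThisFolder_"


@dataclass(frozen=True)
class FileInfo:
    size: int
    modified: float  # seconds since epoch


def plan_sync(local, remote):
    """Return (upload, delete_remote, review) lists of relative paths.

    local / remote: dict mapping a relative POSIX path to FileInfo.
    """
    # Folders containing a marker file are left alone entirely.
    skipped_folders = {
        str(PurePosixPath(path).parent)
        for path in local
        if PurePosixPath(path).name.startswith(MARKER_PREFIX)
    }

    def is_skipped(path):
        return str(PurePosixPath(path).parent) in skipped_folders

    upload, delete_remote, review = [], [], []
    for path, info in sorted(local.items()):
        if is_skipped(path):
            continue
        other = remote.get(path)
        if other is None:
            upload.append(path)  # new local file
        elif other.modified > info.modified:
            review.append(path)  # remote is newer: would be a reverse sync
        elif (other.size, other.modified) != (info.size, info.modified):
            upload.append(path)  # local copy is newer or differs in size
    for path in sorted(remote):
        if path not in local and not is_skipped(path):
            delete_remote.append(path)  # deleted locally, still on the server
    return upload, delete_remote, review


local = {
    "index.html": FileInfo(120, 200.0),
    "about.html": FileInfo(80, 100.0),
    "news/today.html": FileInfo(50, 300.0),
    "drafts/_DoNotSyncFilesInThisFolder_AB.txt": FileInfo(0, 10.0),
    "drafts/wip.html": FileInfo(10, 400.0),
}
remote = {
    "index.html": FileInfo(100, 150.0),
    "about.html": FileInfo(90, 250.0),
    "old/page.html": FileInfo(5, 50.0),
}
upload, delete_remote, review = plan_sync(local, remote)
```

Files that land in `review` are the ones a sync tool would mark for a reverse sync, so they need a human decision before anything is overwritten.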

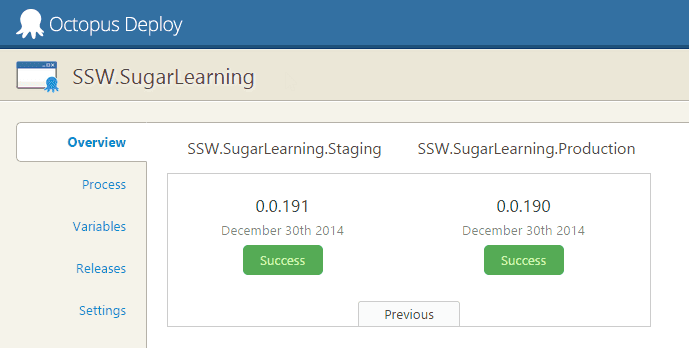

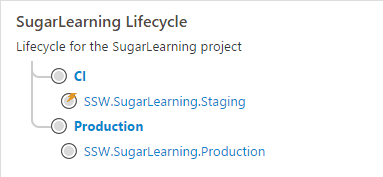

Octopus Deploy 2.6 introduced a new Lifecycles feature that makes Continuous Integration from TFS much easier. It's a must-have for projects in TFS that use Octopus for deployment.

As well as allowing continuous integration, the Lifecycles feature adds some good governance around when a project can be deployed to each environment.

Lifecycles can be found in the Library section of Octopus Deploy. By default, a project will use the Default Lifecycle which allows any deployment at any time.

Figure: Lifecycles can be found in the Library

You should create a new Lifecycle for each project you've configured with Octopus Deploy. Set up a phase to continuously deploy to your first environment (e.g. test or staging), but make sure the final phase of the lifecycle is a manual step to production.

Figure: Good Example - This lifecycle has two phases: an automatic release to a Staging server, and a manual release to the Production server.

In the Process tab of your project definition, there's a panel on the right-hand side that lets you configure the Lifecycle to use. You should also enable Automatic Release Creation. If you have a CI build which publishes a new package to the Octopus NuGet feed as part of your build using OctoPack, and your first Lifecycle phase is automatic, this will result in continuous deployment to your CI environment.
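The rule of "automatic first phase, manual final phase" can be modelled in a few lines. This sketch does not use the Octopus Deploy API; `Phase`, `Lifecycle`, `validate` and `on_package_pushed` are hypothetical names chosen only to make the governance idea concrete.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    environment: str
    automatic: bool  # True: deploy as soon as a release reaches this phase


@dataclass(frozen=True)
class Lifecycle:
    name: str
    phases: tuple

    def validate(self):
        """Return a list of problems with respect to the rule above."""
        if not self.phases:
            return ["lifecycle has no phases"]
        problems = []
        if not self.phases[0].automatic:
            problems.append("first phase should deploy automatically")
        if self.phases[-1].automatic:
            problems.append("final phase should be a manual release")
        return problems

    def on_package_pushed(self):
        """Environments that receive a new package without human action."""
        targets = []
        for phase in self.phases:
            if not phase.automatic:
                break  # everything from here on needs a manual release
            targets.append(phase.environment)
        return targets


good = Lifecycle("MyProject", (Phase("Staging", True), Phase("Production", False)))
risky = Lifecycle("Risky", (Phase("Staging", True), Phase("Production", True)))
```

With the `good` lifecycle, a pushed package reaches Staging on its own and stops there until someone releases it to Production.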

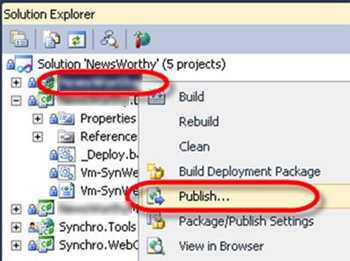

Publishing from Visual Studio is a convenient way to deploy a web application, but it relies on a single developer’s machine which can lead to problems. Deploying to production should be easily repeatable, and able to be performed from different machines.

A better way to deploy is by using a defined Build in TFS.

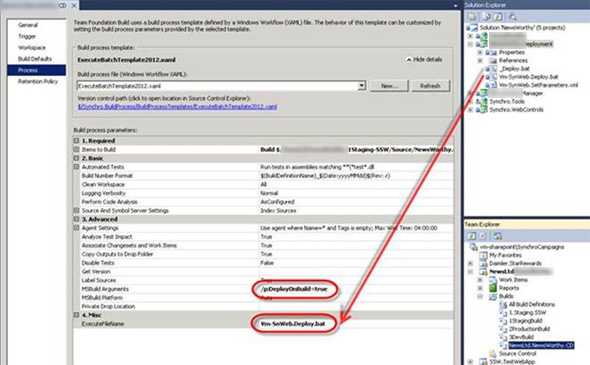

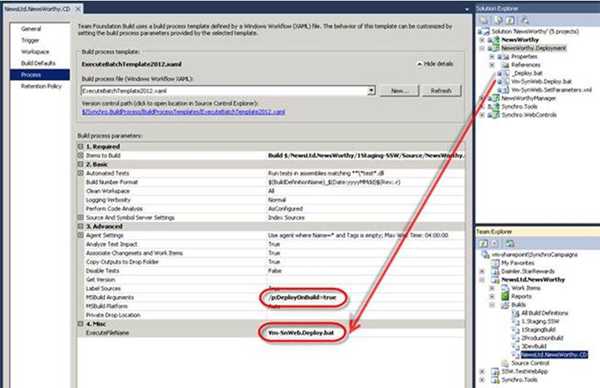

Configure the ExecuteBatchTemplate Build Process Template.

Figure: Enter the DeployOnBuild MSBuild argument, and then enter the name of the deployment batch file you wish to execute upon successful build of the project.

Every time this build is executed successfully (and all the unit tests pass), the specified batch file will run, deploying the site automatically.

Your source control repository should be the source of all truth. Everything, always, no matter what, should go into source control.

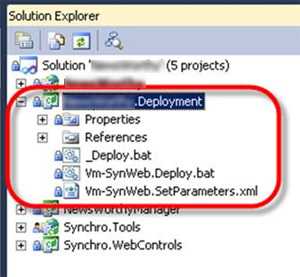

This includes any deployment scripts and Web Deploy parameter files if you need them.


In the image above:

- Vm-SynWeb.Deploy.Bat is a batch file that will deploy your website to Vm-SynWeb.

- Vm-SynWeb.SetParameters.xml is a Web Deploy SetParameters file that specifies environment-specific settings.

- _Deploy.Bat is the base batch file that your environment-specific deployment batch files will call.
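The contents of these batch files are not shown here. As a rough illustration of what an environment-specific script might delegate to, the sketch below assembles a Web Deploy (`msdeploy.exe`) command line for a package and its SetParameters file. The package name `MyWebsite.zip` and the helper `build_msdeploy_command` are assumptions made for this example, and a real script would usually also pass credentials.

```python
def build_msdeploy_command(package, set_parameters_file, server,
                           msdeploy="msdeploy.exe", what_if=False):
    """Build (but do not run) a Web Deploy sync command as an argument list."""
    command = [
        msdeploy,
        "-verb:sync",                             # synchronise source to destination
        f"-source:package={package}",             # the package produced by the build
        f"-dest:auto,computerName={server}",      # target server
        f"-setParamFile:{set_parameters_file}",   # environment-specific settings
    ]
    if what_if:
        command.append("-whatif")  # report what would change without changing it
    return command


# Hypothetical package name; server and parameter file follow the names above.
command = build_msdeploy_command(
    package="MyWebsite.zip",
    set_parameters_file="Vm-SynWeb.SetParameters.xml",
    server="Vm-SynWeb",
    what_if=True,
)
```

The list can be handed to `subprocess.run(command, check=True)` on a machine where Web Deploy is installed. Keeping one parameter file per environment means the same package can be pointed at a different server just by changing the last two arguments.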

Figure: It is important that each of the batch and parameter files has its ‘Copy to Output Directory’ setting set to ‘Copy Always’

Before you configure continuous deployment, you need to ensure that the code that you have on the server compiles. A successful CI build without deployment lets you know the solution will compile.

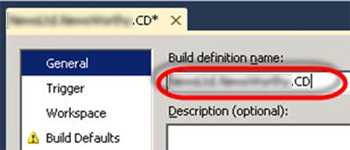

Figure: The Build definition name should include the project name. The reason for this is that builds for all solutions are placed in the same folder, and including the project name keeps the Build Drop folder organised.

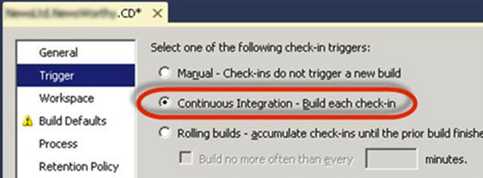

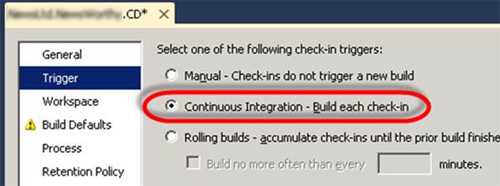

Figure: On the Trigger tab choose Continuous Integration. This ensures that each check-in results in a build

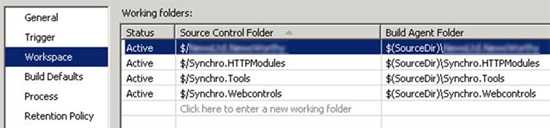

Figure: On the Workspace tab you need to include all source control folders that are required for the build

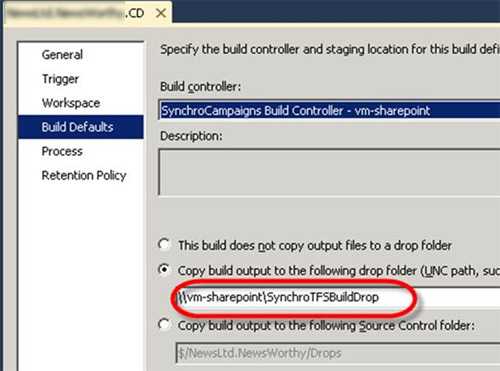

Figure: Enter the path to your Drop Folder (where you drop your builds)

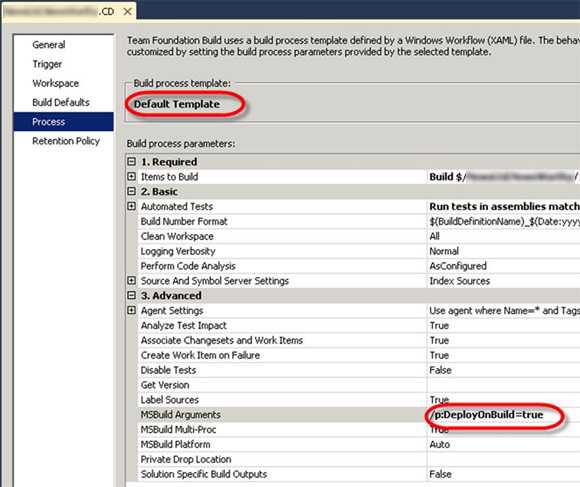

Figure: Choose the Default Build template and enter the DeployOnBuild argument in the MSBuild Arguments parameter of the build template

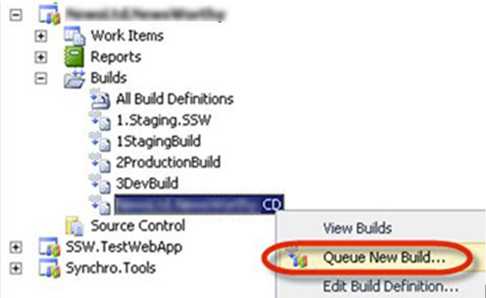

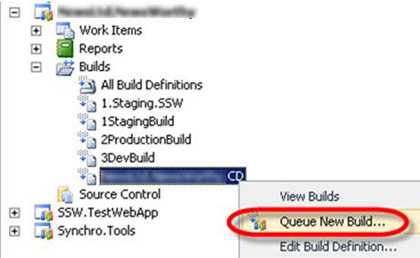

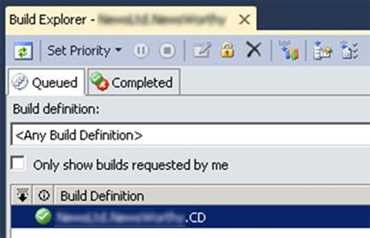

Figure: Queue a build, to ensure our CI build is working correctly

Figure: Before we set up continuous deployment, it is important to get a successful basic CI build

If you use Azure websites and VisualStudio.com, you can set up continuous deployment in 5 minutes.

If you are using TFS 2012 on-premises, or are not using Windows Azure websites, follow the steps below to configure continuous deployment.

As you can see, there are a lot of steps and you will need at least a day to get it all right.

In theory, Web Deploy can create a site for you when you deploy. The issue with this is that many settings are assumed.

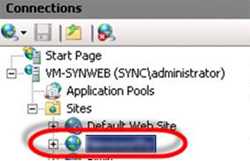

Always create the site before deploying to it, so that you can specify exactly the settings that you want, e.g. the directory where the files for the site will be saved, the app pool to use, and the version of .NET.

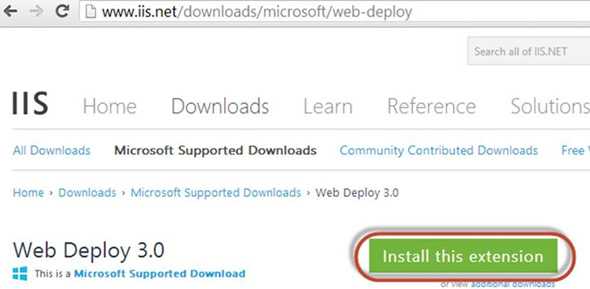

Figure: Create the website in IIS

You should not install Web Deploy from the Web Platform Installer, but instead download the installation from the IIS website (http://www.iis.net/downloads/microsoft/web-deploy).

The reason for this is that the Web Platform Installer does not install all of the components required for continuous deployment, but the downloaded package does.

More information on this issue here: Don't Install Web Deployment Tool using the Web Platform Installer

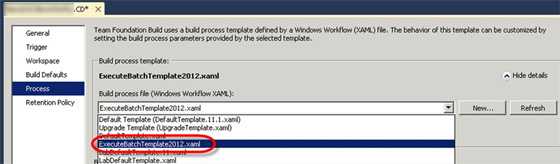

Update your Build to use the ExecuteBatchTemplate Build Process Template.

Figure: If the ExecuteBatchTemplate is available in the dropdown list on the Process tab, select it and continue in the next section

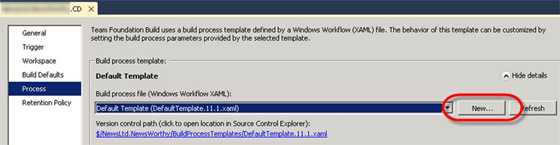

Figure: If the ExecuteBatchTemplate is not available in the dropdown list, click the New button

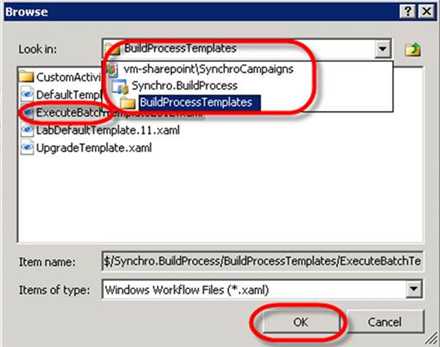

Figure: Select the Browse button to browse source control for the correct build process template

Figure: Navigate to the \BuildProcessTemplates\ folder and then select the ExecuteBatchUpdate template. Click "OK"

Ideally, Builds are created once, and can then be deployed to any environment, at any point in time (Build Once, Deploy Many). We do this by including deployment batch files in the solution, and specifying them to be called in the Build Process Template.

Figure: Good example - Include deployment scripts in the solution, and execute them from the Build Process Template

❌ Bad example - Using Builds to Deploy

Overview

1. A separate build is created per target environment

2. The MS Deploy parameters are put into the MSBuild parameters setting on the process template

3. The build for the shared development server is set to be a CI build so it is executed on every check-in

Deployment Process

1. The build is automatically deployed to the shared dev server

2. Lots of testing occurs and we decide to deploy to staging

3. We can just kick off the staging build

4. A whole lot of testing occurs and we want to deploy to production

5. We can kick off a production build, but this will deploy the latest source code to production

6. If we want to deploy the version of the software that we have deployed to staging, we have to get that specific version from source control, and then do a production build of it

👍 Benefits

1. No need to create batch files or modify the process template

👎 Cons

1. Without modifying the build process template, the build will deploy even if the unit tests fail

2. To deploy a specific build to a particular server, it is necessary to get the code from source control, and then do a build

3. Only developers can deploy

4. You need a build per environment for each project

5. Build Once, Deploy Once. (You can't redeploy a build to a different environment)

✅ Good example - Using Batch File Deployment

Overview

1. One batch file per target environment is created and checked into source control alongside the web project

2. Each batch file is accompanied by a corresponding Web Deploy Parameterisation XML file with environment-specific settings

3. The build process template is modified to call the batch file to continuously deploy to the shared development server

Deployment Process

1. The build is automatically deployed to the shared dev server

2. Lots of testing occurs and we decide to deploy to staging

3. The batch file for any build can be executed and the build deployed to staging

4. A whole lot of testing occurs on staging and then we decide to deploy the same build to production

Note: We just call the batch file in the folder to do the deployment. No new build is required.

👍 Benefits

1. Builds are created once, and can then be deployed many times to any environment, at any point in time (Build Once, Deploy Many)

2. When deploying to production, we use exactly the same build package as was used to deploy to staging

3. The custom build process template only does the deployment if the build succeeds and all the unit tests pass

4. Anyone with access to the batch file can deploy… including the Product Owner!

5. You only need one build per project

👎 Cons

1. You must customize the build process template to execute the specified batch file from the build folder

2. We have to create custom batch files

The Web Platform Installer is great, but does not install all the Web Deploy 3.0 components required for continuous deployment.

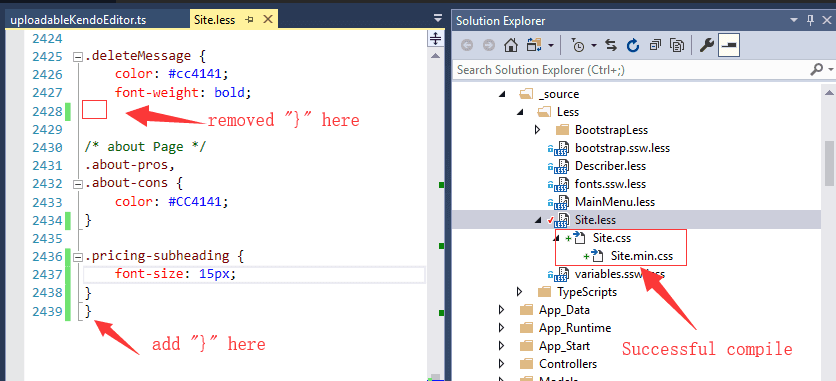

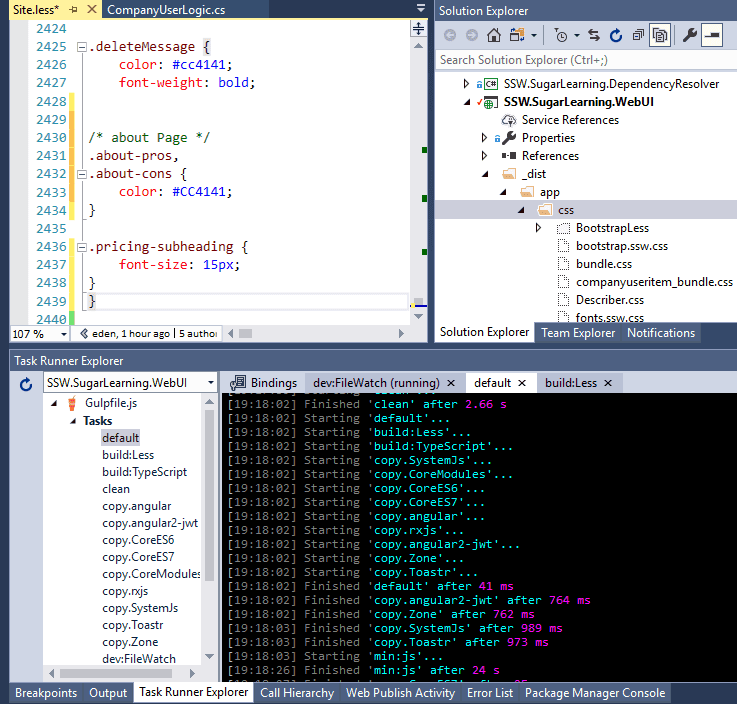

You can use Visual Studio's Web Compiler extension to create a bundle.css and test if CSS was compiled successfully.

More information and download at Visual Studio Marketplace.

Unfortunately, some kinds of errors are not caught.

In addition, Gulp is wrongly successful too:

In the Face of Armageddon

When there's a major error during deployment or a catastrophic fault happens, what is your first instinct? Is it to hit that Roll Back button?

It feels like reverting back to the previous stable version is the safest and quickest way to restore functionality.

Figure: Fixing Problems

However, rolling back can have several negative consequences, making it crucial to consider the drawbacks:

- Negation of new features and improvements

- Cycle of constant reversion

- Resource-intensive and disruptive

- Causes downtime and requires manual intervention

From Setback to Comeback

To support decision-making, developers should set up robust monitoring systems. Effective monitoring ensures accurate and prompt issue identification, which in turn supports informed decisions. This is particularly important when it comes to making the decision to Roll Forward.

Here are the reasons why you should Roll Forward:

- Continuous Improvement: Rolling forward allows for incremental improvements, ensuring that any issues are promptly addressed without hindering overall progress.

- Minimized Downtime: By fixing issues on the go rather than reverting to previous versions, Rolling Forward reduces system downtime and minimizes disruptions to business operations.

- Data Integrity: Changes that minimally impact user data or schema allow for seamless updates without risking data integrity. Rolling Forward ensures that data remains intact and consistent.

- Customer Confidence: Demonstrating a commitment to Rolling Forward and resolving issues promptly can build customer confidence and trust in your ability to deliver reliable and up-to-date solutions.

By prioritizing rolling forward, you embrace a proactive approach that promotes resilience, agility, and continuous delivery.

Prevention is Better Than Cure

To keep your deployments stress free, do the following:

- Set up Application Insights

- Write Unit Tests

- Understand the different types of testing

- Set up a production-like environment

- Manage Feature Flags

If issues occur, rolling forward with targeted fixes is the best way to maintain progress and stability.
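Feature flags are one way to make a roll forward cheap: the faulty code path is switched off while the release stays deployed, and a targeted fix follows. The sketch below is a minimal in-memory illustration; the `FeatureFlags` class, the flag name `new_discount_engine` and the `checkout_total` function are hypothetical and not taken from any particular feature-flag product.

```python
class FeatureFlags:
    """A tiny in-memory flag store (real systems keep flags in configuration)."""

    def __init__(self, defaults):
        self._flags = dict(defaults)

    def is_enabled(self, name):
        # Unknown flags are treated as off, so new code stays dark by default.
        return self._flags.get(name, False)

    def disable(self, name):
        self._flags[name] = False


def checkout_total(prices_in_cents, flags):
    total = sum(prices_in_cents)
    if flags.is_enabled("new_discount_engine"):
        # New code path shipped in the latest release: 10% discount.
        total = total * 90 // 100
    return total


flags = FeatureFlags({"new_discount_engine": True})
with_new_feature = checkout_total([1000, 2000], flags)

# A problem is detected in production: turn the feature off instead of
# rolling back the whole release, then ship a targeted fix later.
flags.disable("new_discount_engine")
after_disabling = checkout_total([1000, 2000], flags)
```

Everything else in the release remains live, so other new features and fixes are not lost while the problem is investigated.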