Rules to Better Unit Tests - 23 Rules

Enhance your software quality with these essential rules for unit testing. From understanding the importance of unit tests to leveraging effective testing tools, these guidelines will help you create robust tests, isolate your logic, and ensure your applications perform as expected.

Customers get cranky when developers make a change which causes a bug to pop up somewhere else. One way to reduce the risk of such bugs occurring is to build a solid foundation of unit tests.

When writing code, one of the most time-consuming and frustrating parts of the process is finding that something you have changed has broken something you're not looking at. These bugs often don't get found for a long time, as they are not the focus of the "test please" and only get found later by clients.

Customers may also complain that they shouldn't have to pay for this new bug to be fixed. Although this is understandable, fixing bugs is a large part of the project and is always billable. However, if these bugs can be caught early, they are generally easier, quicker and cheaper to fix.

Unit tests double-check that your core logic is still working every time you compile, helping to minimize the number of bugs that get through your internal testing and end up being found by the client.

Think of this as a "Pay now, pay much less later" approach.

Some people aim for 100% Unit Test Coverage but, in the real world, this is 100% impractical. Actually, it seems that the most popular metric in TDD (Test-Driven Development) is to aim for 100% of the methods to be unit tested. However, in practice, this goal is rarely achieved.

Unit tests are created to validate and assert that public and protected methods of a class meet an expected outcome based on varying input. This includes both good and bad data being tested, to ensure the method behaves as expected and returns the correct result or traps any errors.

Tip: Don't focus on meeting a unit test coverage target. Focus on quality over quantity.

Remember that unit tests are designed to be small in scope and help mitigate the risk of code changes. When deciding which unit tests to write, think about the risks you're mitigating by writing them. In other words, don't write unit tests for the sake of it, but write them where it makes sense and where they actually help to reduce the risk of unintended consequences of code changes.

✅ Unit tests should be written for:

- Critical path

- Core functionality

- Fragile code - E.g. regular expressions

- When errors can be difficult to spot - E.g. in rounding, arithmetic and other calculations

Examples:

- In a calculation, you would not only test correct input (such as 12/3 = 4) and bad output (such as 12/4 <> 4), but also that 12/0 does not crash the application (instead a DivideByZero exception is expected and should be handled gracefully).

- Methods returning a Boolean value need to have test cases to cover both true and false.
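As a minimal sketch of the examples above, assuming xUnit.net as the test framework: the `Calculator` class and its `Divide` and `IsEven` methods are hypothetical names invented for illustration. The tests cover correct input, a result that must not match, the divide-by-zero case, and both outcomes of a Boolean method.

```csharp
using System;
using Xunit;

// Hypothetical class under test, for illustration only.
public class Calculator
{
    // Integer division; dividing by zero throws DivideByZeroException.
    public int Divide(int dividend, int divisor) => dividend / divisor;

    public bool IsEven(int value) => value % 2 == 0;
}

public class CalculatorTests
{
    private readonly Calculator _calculator = new Calculator();

    [Fact]
    public void Divide_WithValidInput_ReturnsQuotient()
    {
        Assert.Equal(4, _calculator.Divide(12, 3));
    }

    [Fact]
    public void Divide_WithDifferentDivisor_DoesNotReturnSameQuotient()
    {
        Assert.NotEqual(4, _calculator.Divide(12, 4));
    }

    [Fact]
    public void Divide_ByZero_ThrowsDivideByZeroException()
    {
        // The exception is the expected outcome; callers decide how to handle it.
        Assert.Throws<DivideByZeroException>(() => _calculator.Divide(12, 0));
    }

    [Theory]
    [InlineData(2, true)]
    [InlineData(3, false)]
    public void IsEven_CoversBothOutcomes(int value, bool expected)
    {
        Assert.Equal(expected, _calculator.IsEven(value));
    }
}
```

Whether the application catches the exception or validates the divisor up front is a design choice; the test only pins down which behaviour is expected.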

❌ Unit tests should not be written for:

- Dependencies - E.g. database schemas, datasets, Web Services, DLLs, runtime errors (JIT)

- Performance - E.g. slow forms, time-critical applications

- Generated code - Code that has been generated from Code Generators, e.g. SQL database functions (Customer.Select, Customer.Update, Customer.Insert, Customer.Delete)

- Private methods - Private methods should be tested by the unit tests on the public and protected methods calling them and this will indirectly test that the private method behaves as intended. This helps to reduce maintenance as private methods are likely to be refactored (e.g. changed or renamed) often and would require any unit tests against them to be updated frequently.

It's important that the unit tests you develop are capable of failing and that you have seen them fail. A test that can never fail isn't helpful for anyone.

This is a fundamental principle in Test Driven Development (TDD) called Red/Green/Refactor.

A common approach is to throw a `NotImplementedException` from the method you are writing tests for. For example:

```csharp
[Fact]
public void ShouldAddTwoNumbers()
{
    var calculator = new Calculator();
    var result = calculator.Sum(10, 11);
    Assert.Equal(21, result);
}

// The method to test in class Calculator ...
public int Sum(int x, int y)
{
    throw new NotImplementedException();
}
```

Figure: Bad example - The test fails by throwing a `NotImplementedException`

This test fails for the wrong reason: it throws a `NotImplementedException`. In production, this is not a valid reason for this test to fail. A `NotImplementedException` is synonymous with "still in development", so include a `//TODO:` marker with some notes about the steps to take to implement the test.

A better approach is to return a value that is invalid.

```csharp
[Fact]
public void ShouldCheckIfPositive()
{
    var calculator = new Calculator();
    var result = calculator.IsPositive(10);
    Assert.True(result);
}

// The method to test in class Calculator ...
public bool IsPositive(int x)
{
    // Deliberately wrong result so the test fails on its assertion
    return false;
}
```

Figure: Good example - The test fails by returning an invalid value

Sometimes there is no clear definition of an invalid value. In that case it is acceptable to fail a test using `NotImplementedException`. Add additional remarks, notes or steps on what to test and how to implement it with a `//TODO: ...` marker. This will assist you or other developers coming across this failed test.

Make sure that this test will be implemented before a production release.

```csharp
// The method to test in class Calculator ...
public bool IsPositive(int x)
{
    // NOTE: this method has a clear "invalid" value
    return false;
}

public int Sum(int x, int y)
{
    // NOTE: this method does not have a clear "invalid" value, so it throws
    // a NotImplementedException and includes a TODO marker
    // TODO: need to implement Sum by adding both operands together using return x + y;
    throw new NotImplementedException();
}
```

Figure: Good example - The test fails by returning an invalid result or throwing a `NotImplementedException` with a `//TODO:` item

In this case, the test will fail because the `IsPositive` behavior is incorrect and `Sum` is missing its implementation.

You should do mutation testing to remove false positive tests and test your test suite to have more confidence. Visit the Wiki for more information about mutation testing.

To perform mutation testing you can use Stryker.NET.

When you encounter a bug in your application you should never let the same bug happen again. The best way to do this is to write a unit test for the bug, see the test fail, then fix the bug and watch the test pass. This is also known as Red-Green-Refactor.

Tip: you can then reply to the bug report with "Done + Added a unit test so it can't happen again"

There are three main frameworks for unit testing. The good news is that they are all acceptable choices:

- They all have test runner packages for running tests directly from Visual Studio

- They all have console-based runners that can run tests as part of a CI/CD pipeline

- They differ slightly in syntax and feature set

xUnit.net – Recommended

xUnit.net is a newer framework – written by the original creator of NUnit v2 to create a more opinionated and restrictive framework to encourage TDD best practice. For example, when running xUnit tests, the class containing the test methods is instantiated separately for each test so that tests cannot share data and can run in parallel.
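The per-test instantiation described above can be sketched as follows. This is an illustrative example assuming the xUnit.net package; `ShoppingCartTests` and its field are hypothetical names. Because a new instance of the test class is created for each test method, the constructor acts as per-test setup and the list never carries state from one test to another.

```csharp
using System.Collections.Generic;
using Xunit;

public class ShoppingCartTests
{
    // A fresh list per test: xUnit.net constructs this class once per test method.
    private readonly List<string> _items;

    public ShoppingCartTests()
    {
        _items = new List<string>();
    }

    [Fact]
    public void Add_OneItem_CountIsOne()
    {
        _items.Add("apple");

        Assert.Single(_items);
    }

    [Fact]
    public void NewList_BeforeAnyAdd_IsEmpty()
    {
        // Passes regardless of execution order because state is not shared.
        Assert.Empty(_items);
    }
}
```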

xUnit.net is currently the most popular framework - and is even used by the .NET Core team.

However, note that xUnit.net still uses the old Assert standard and should be augmented by a better assertion library, such as FluentAssertions or Shouldly.

xUnit.net is the default choice for .NET Core web applications and APIs at SSW.

NUnit

The NUnit project deserves recognition for being the first powerful and open-source unit test framework for the .NET universe – and it’s still a solid choice today.

NUnit has undergone large changes in the last 10 years with its NUnit3 version. The most notable is the Assert Constraints, which is a built-in Fluent Assertion library, allowing you to write readable asserts like `Assert.That(x, Is.EqualTo(42).Within(0.1))`. It has also adopted the lifetime scopes of xUnit, but you can choose which one to use.

NUnit differs from xUnit in being more flexible and more adaptable, whereas xUnit is more restrictive and opinionated.

Because NUnit has an open-source .NET UI control for running tests, NUnit is still SSW’s preferred choice for embedding unit tests and a runner UI inside a Windows application.

MSTest

MSTest is Microsoft's testing framework. In the past this was a poor choice as although this was the easiest framework to run from Visual Studio, it was extremely difficult to automate these tests from CI/CD build servers. These problems have been completely solved with .NET Core but for most C# developers this is “too little, too late” and the other unit testing frameworks are now more popular.

Respawn

Respawn is a lightweight utility for cleaning up a database to a known state before running integration tests. It is specifically designed for .NET developers who use C# for testing purposes. By intelligently deleting only the data that has changed, Respawn can dramatically reduce the time it takes to reset a test database to its initial state, making it an efficient tool for improving the speed and reliability of integration tests. Respawn supports SQL Server, PostgreSQL, and MySQL databases.

Testcontainers for .NET

Testcontainers for .NET is a library that enables C# .NET developers to create, manage, and dispose of throwaway instances of database systems or other software components within Docker containers for the purpose of automated testing.

It provides a programmatic API to spin up and tear down containers, ensuring a clean and isolated environment for each test run. Testcontainers supports various containers, including databases like SQL Server, PostgreSQL, and MongoDB, as well as other services like Redis, Kafka, and more, making it a versatile tool for integration testing in a .NET environment.

Mixing test frameworks

.NET is a flexible ecosystem, and that also applies to the use of test frameworks. There is nothing preventing you from using multiple different test frameworks in the same project. They will coexist with no pain. That way you can get the best from each, and not be locked in to something that doesn't allow you to do your job efficiently.

Check out these sources to get an understanding of the role of unit testing in delivering high-quality software:

- Read the Wikipedia Unit test page and learn how to write unit tests on fragile code.

- Read Tim Ottinger & Jeff Langr's FIRST principles of unit tests (Fast, Isolated, Repeatable, Self-validating, Timely) to learn about some common properties of good unit tests.

- Read Bas Dijkstra's Why I think unit testing is the basis of any solid automation strategy article to understand how a good foundation of unit tests helps you with your automation strategy.

- Read Martin Fowler's UnitTest to learn about some different opinions as to what constitutes a "unit".

- Read the short article 100 Percent Unit Test Coverage Is Not Enough by John Ruberto as a cautionary tale about focusing on test coverage over test quality.

- Read the Wikipedia Test-driven development page (optional)

- Read the SSW Rule - Test Please - we never release un-tested code to a client!

A Continuous Integration (CI) server monitors the Source Control repository and, when something changes, it will check out, build and test the software.

If something goes wrong, notifications are sent out immediately (e.g. via email or Teams) so that the problems can be quickly remedied.

It's all about managing the risk of change

Building and testing the software on each change made to the code helps to reduce the risk of introducing unwanted changes in its functionality without us realising.

The various levels of automated testing that may form part of the CI pipeline (e.g. unit, contract, integration, API, end-to-end) all act as change detectors, so we're alerted to unexpected changes almost as soon as the code that created them is committed to the code repository.

The small change deltas between builds in combination with continuous testing should result in a stable and "known good" state of the codebase at all times.

Tip: Azure DevOps and GitHub both provide online build agents with a free tier to get you started.

Test Projects

Tests typically live in separate projects, and you usually create a project from a template for your chosen test framework.

Because your test projects are startup projects (in that they can be independently started), they should target specific .NET runtimes and not just .NET Standard.

A unit test project usually targets a single code project.

Project Naming

Integration and unit tests should be kept separate and should be named to clearly distinguish the two.

This is to make it easier to run only unit tests on your build server (and this should be possible as unit tests should have no external dependencies).

Integration tests require dependencies and often won't run as part of your build process. These should be automated later in the DevOps pipeline.

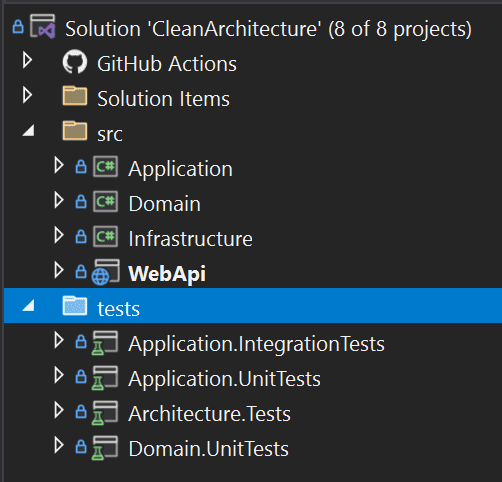

Test Project Location

Test projects can be located either:

- Directly next to the project under test – which makes them easy to find, or

- In a separate "tests" location – which makes it easier to deploy the application without tests included

Figure: In the above project the tests are clearly placed in a separate location, making it easy to deploy to production without them. It's easy to tell which project is under test and what style of tests will be found in each test project. Source: github.com/SSWConsulting/SSW.CleanArchitecture

Naming Conventions for Tests

There are a few "schools of thought" when it comes to naming the tests themselves.

Internal consistency within a project is important.

It's usually a bad idea to name tests after the class or method under test, as this naming can quickly get out of sync if you use refactoring tools, and one of the key benefits of unit testing is the confidence to refactor!

Remember that descriptive names are useful – but the choice of name is not the developer’s only opportunity to create readable tests.

- Write tests that are easy to read by following the 3 A's (Arrange, Act, and Assert)

- Use a good assertion library to make test failures informative (e.g. FluentAssertions or Shouldly)

- Use comments and refer to bug reports to document the “why” when you have a test for a specific edge-case

- Remember that the F12 shortcut will navigate from the body of your test straight to the method you’re calling

- The point of a naming convention is to make code more readable, not less - so use your judgement and call in others to verify your readability

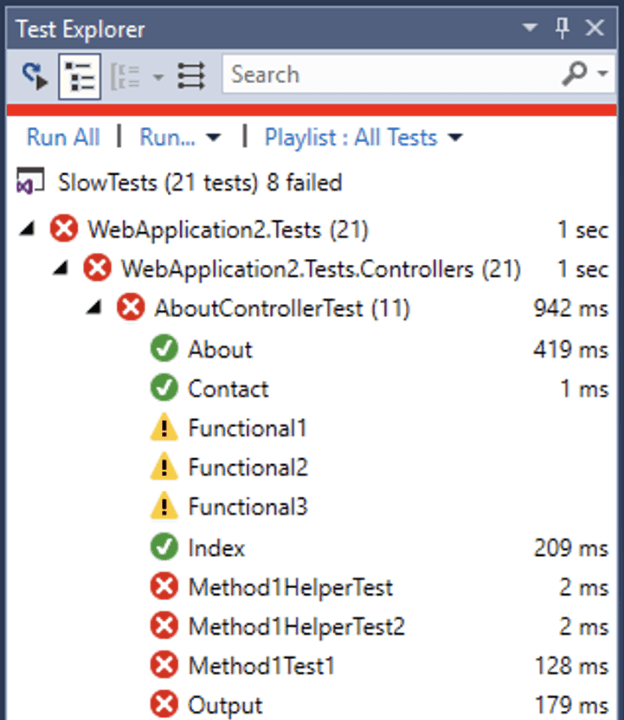

Figure: Bad example - From the Test Explorer view you cannot tell what a test is meant to test just from its name

Option 1: [Method/Class]_[Condition]_[ExpectedResult] (Recommended)

`[Method/Class]_[Condition]_[ExpectedResult]`

Figure: The naming convention is effective: it encourages developers to clearly define the expected result upfront without requiring too much verbosity

Think of this as 3 parts, separated by underscores:

- The System Under Test (SUT), typically the method you're testing or the class

- The condition: this might be the input parameters, or the state of the SUT

- The expected result, this might be output of a function, an exception or the state of the SUT after the action

The following test names use the naming convention:

```
Withdraw_WithInvalidAccount_ThrowsException
Checkout_WithCountryAsAustralia_ShouldAdd10PercentTax
Purchase_WithBalanceWithinCreditLimit_ShouldSucceed
```

Figure: Good example - Without looking at code, it's clear what the unit tests are trying to do
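To show how the three parts of the name map onto a test body, here is a small sketch assuming xUnit.net. The `Account` class, its credit-limit rule and the second test name are hypothetical and exist only to give the names something to test.

```csharp
using System;
using Xunit;

// Hypothetical system under test, for illustration only.
public class Account
{
    public decimal Balance { get; private set; }
    public decimal CreditLimit { get; }

    public Account(decimal balance, decimal creditLimit)
    {
        Balance = balance;
        CreditLimit = creditLimit;
    }

    public void Purchase(decimal amount)
    {
        // The balance may go negative, but not below the credit limit.
        if (Balance - amount < -CreditLimit)
            throw new InvalidOperationException("Credit limit exceeded.");

        Balance -= amount;
    }
}

public class AccountTests
{
    // [Method]_[Condition]_[ExpectedResult]
    [Fact]
    public void Purchase_WithBalanceWithinCreditLimit_ShouldSucceed()
    {
        var account = new Account(balance: 100m, creditLimit: 50m);

        account.Purchase(120m);

        Assert.Equal(-20m, account.Balance);
    }

    [Fact]
    public void Purchase_WithBalanceOverCreditLimit_ThrowsException()
    {
        var account = new Account(balance: 100m, creditLimit: 50m);

        Assert.Throws<InvalidOperationException>(() => account.Purchase(200m));
    }
}
```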

Option 2: [Given]_[When]_[Then]

`[Given]_[When]_[Then]`

Figure: The naming convention is useful when working with Gherkin statements or BDD style DevOps

Following a Gherkin statement of:

```
GIVEN I am residing in Australia
WHEN I checkout my cart
THEN I should be charged 10% tax
```

This could be written as:

`GivenResidingInAustralia_WhenCheckout_ThenCharge10PercentTax`

Conclusion

Remember, pick what naming method works for your team & organisation's way of working (Do you understand the value of consistency?). Then record it in your team's Architectural Decision Records.

Resources

For more reading, see the Microsoft guidance on Unit testing best practices.

A list of other suggested conventions can be found here: 7 Popular Unit Test Naming Conventions.

A test verifies expectations. Traditionally it has the form of 3 major steps:

- Arrange

- Act

- Assert

In the "Arrange" step we get everything ready and make sure we have all things handy for the "Act" step.

The "Act" step executes the relevant code piece that we want to test.

The "Assert" step verifies our expectations by stating what we were expecting from the system under test.

Developers call this the "AAA" syntax.

```csharp
[TestMethod]
public void TestRegisterPost_ValidUser_ReturnsRedirect()
{
    // Arrange
    AccountController controller = GetAccountController();
    RegisterModel model = new RegisterModel()
    {
        UserName = "someUser",
        Email = "goodEmail",
        Password = "goodPassword",
        ConfirmPassword = "goodPassword"
    };

    // Act
    ActionResult result = controller.Register(model);

    // Assert
    RedirectToRouteResult redirectResult = (RedirectToRouteResult)result;
    Assert.AreEqual("Home", redirectResult.RouteValues["controller"]);
    Assert.AreEqual("Index", redirectResult.RouteValues["action"]);
}
```

Figure: A good structure for a unit test

By difficult to spot errors, we mean errors that do not give the user a prompt that an error has occurred. These types of errors are common around arithmetic, rounding and regular expressions, so they should have unit tests written around them.

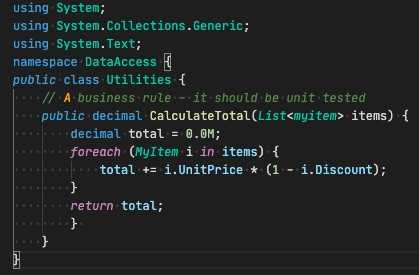

Sample Code:

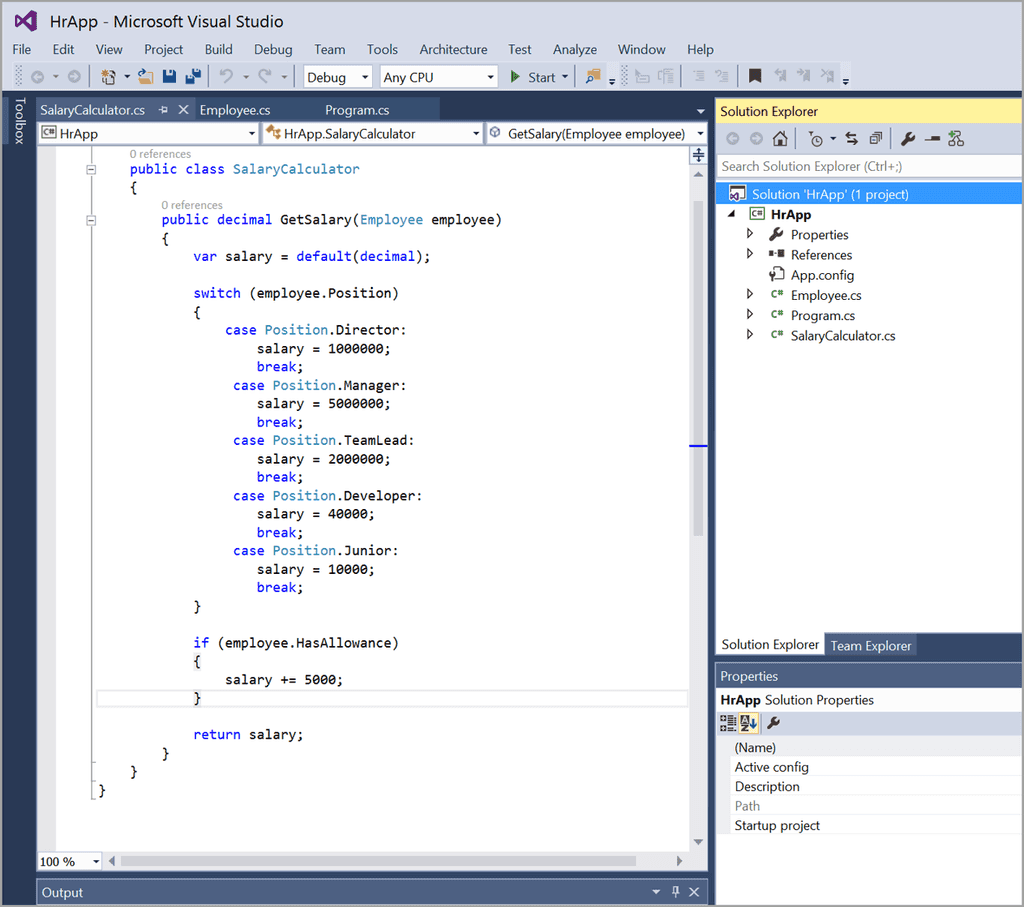

Figure: Function to calculate a total for a list of items

For a function like this, it might be simple to spot errors when there are one or two items. But if you were to calculate the total for 50 items, then the task of spotting an error isn't so easy. This is why a unit test should be written so that you know when the function doesn't work correctly.

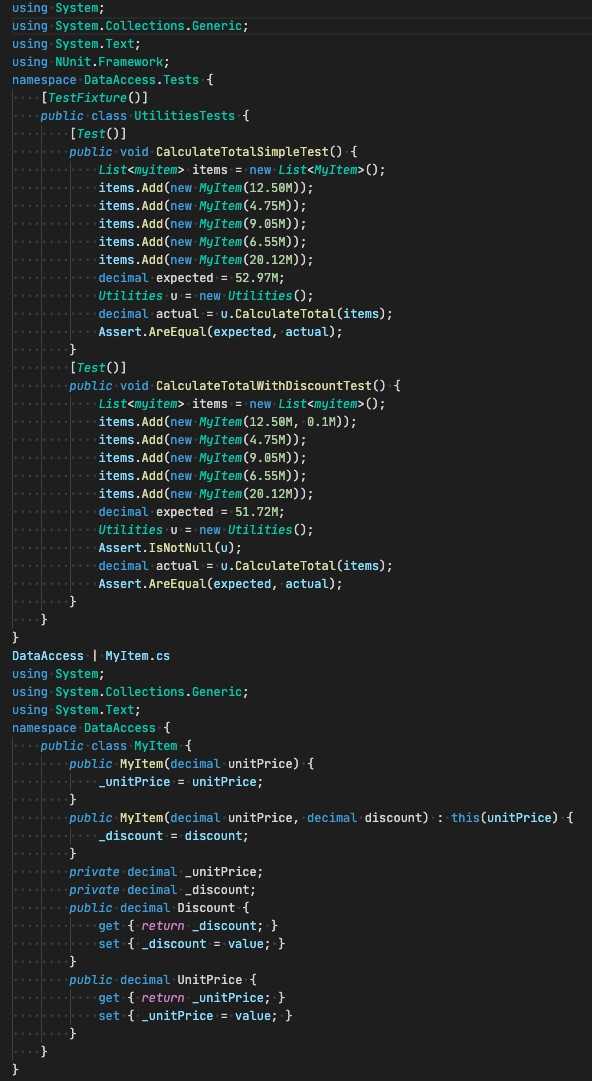

Sample Test: (Note: it doesn't need a failure case because it isn't a regular expression.)

Figure: Test calculates the total by checking something we know the result of.
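Since the sample above survives only as images, the following is a rough sketch of the same idea rather than the original code. It assumes xUnit.net and C# 9 or later; `OrderLine`, `OrderCalculator` and the rounding rule are hypothetical. The test checks a total whose correct value is known in advance, including a midpoint value where the wrong rounding mode would silently produce a different answer.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Xunit;

// Hypothetical order line, for illustration only.
public record OrderLine(decimal UnitPrice, int Quantity);

public static class OrderCalculator
{
    // Sums price x quantity for every line and rounds the result to whole cents.
    public static decimal CalculateTotal(IEnumerable<OrderLine> lines) =>
        Math.Round(
            lines.Sum(line => line.UnitPrice * line.Quantity),
            2,
            MidpointRounding.AwayFromZero);
}

public class OrderCalculatorTests
{
    [Fact]
    public void CalculateTotal_WithKnownLines_ReturnsKnownTotal()
    {
        var lines = new[]
        {
            new OrderLine(19.99m, 3),   // 59.97
            new OrderLine(0.335m, 1),   // 0.335
            new OrderLine(5.00m, 10),   // 50.00
        };

        // 59.97 + 0.335 + 50.00 = 110.305, which rounds away from zero to 110.31.
        Assert.Equal(110.31m, OrderCalculator.CalculateTotal(lines));
    }
}
```

With the default `MidpointRounding.ToEven` the same input would give 110.30, which is exactly the kind of one-cent error that is hard to spot by eye across many items.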

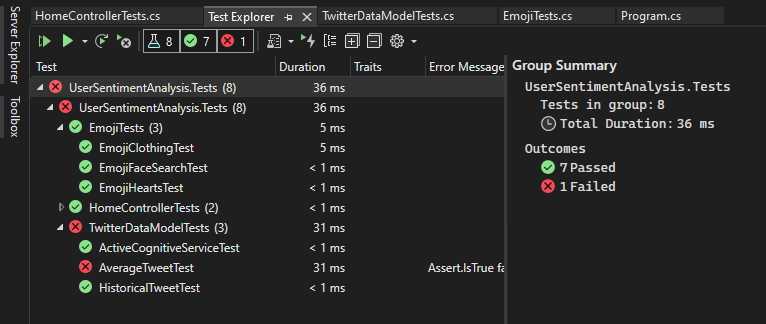

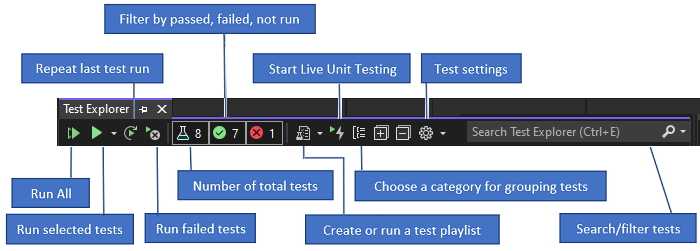

When you build the test project in Visual Studio, the tests appear in Test Explorer. If Test Explorer is not visible, choose Test | Windows | Test Explorer.

Figure: Test Explorer in Visual Studio

As you run, write, and rerun your tests, the Test Explorer displays the results in a default grouping of Project, Namespace, and Class. You can change the way the Test Explorer groups your tests.

You can perform much of the work of finding, organizing and running tests from the Test Explorer toolbar.

Figure: Use the Test Explorer toolbar to find, organize and run tests

You can run all the tests in the solution, all the tests in a group, or a set of tests that you select:

- To run all the tests in a solution, choose Run All

- To run all the tests in a default group, choose Run and then choose the group on the menu

- Select the individual tests that you want to run, open the context menu for a selected test and then choose Run Selected Tests.

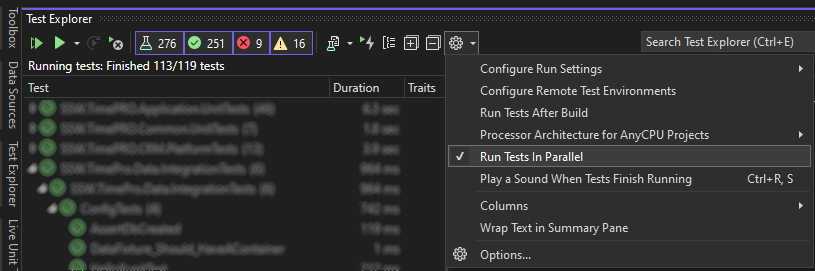

Tip: If individual tests have no dependencies that prevent them from being run in any order, turn on parallel test execution in the settings menu of the toolbar. This can noticeably reduce the time taken to run all the tests.

Figure: Turn on "Run Tests In Parallel" to reduce the elapsed time to run all the tests

As you run, write and rerun your tests, Test Explorer displays the results in groups of Failed Tests, Passed Tests, Skipped Tests and Not Run Tests. The details pane at the bottom or side of the Test Explorer displays a summary of the test run.

Tip: If you are using .NET Core or .NET 5+, you can run tests from the command line by running `dotnet test`.

If there are complex logic evaluations in your code, we recommend you isolate them and write unit tests for them.

Take this for example:

```csharp
while ((ActiveThreads > 0 || AssociationsQueued > 0)
    && (IsRegistered || report.TotalTargets <= 1000)
    && (maxNumPagesToScan == -1 || report.TotalTargets < maxNumPagesToScan)
    && (!CancelScan))
```

Figure: This complex logic evaluation can't be unit tested

Writing a unit test for this piece of logic is virtually impossible - the only time it is executed is during a scan and there are lots of other things happening at the same time, meaning the unit test will often fail and you won't be able to identify the cause anyway.

We can update this code to make it testable though.

Update the line to this:

```csharp
while (!HasFinishedInitializing(ActiveThreads, AssociationsQueued, IsRegistered,
    report.TotalTargets, maxNumPagesToScan, CancelScan))
```

Figure: Isolate the complex logic evaluation

We are using all the same parameters - however, now we are moving the actual logic to a separate method.

Now create the method:

```csharp
private static bool HasFinishedInitializing(int ActiveThreads, int AssociationsQueued, bool IsRegistered,
    int TotalAssociations, int MaxNumPagesToScan, bool CancelScan)
{
    // "Finished" is the negation of the original "keep looping" condition,
    // because the caller now loops while !HasFinishedInitializing(...)
    return !((ActiveThreads > 0 || AssociationsQueued > 0)
        && (IsRegistered || TotalAssociations <= 1000)
        && (MaxNumPagesToScan == -1 || TotalAssociations < MaxNumPagesToScan)
        && (!CancelScan));
}
```

Figure: Function of the complex logic evaluation

The critical thing is that everything the method needs to know is passed in, it mustn't go out and get any information for itself and mustn't rely on any other objects being instantiated. In Functional Programming this is called a "Pure Function". A good way to enforce this is to make each of your logic methods static. They have to be completely self-contained.

The other thing we can do now is actually go and simplify / expand out the logic so that it's a bit easier to digest.

```csharp
public class Initializer
{
    public static bool HasFinishedInitializing(
        int ActiveThreads,
        int AssociationsQueued,
        bool IsRegistered,
        int TotalAssociations,
        int MaxNumPagesToScan,
        bool CancelScan)
    {
        // Cancel
        if (CancelScan)
            return true;

        // Only up to 1000 links if it is not a registered version
        if (!IsRegistered && TotalAssociations > 1000)
            return true;

        // Only scan up to the specified number of links
        // (>= keeps this equivalent to the original "TotalTargets < maxNumPagesToScan" loop condition)
        if (MaxNumPagesToScan != -1 && TotalAssociations >= MaxNumPagesToScan)
            return true;

        // No active threads and the queue is empty
        if (ActiveThreads <= 0 && AssociationsQueued <= 0)
            return true;

        return false;
    }
}
```

Figure: Simplify the complex logic evaluation

The big advantage now is that we can unit test this code easily in a whole range of different scenarios!

```csharp
public class InitializerTests
{
    [Theory]
    [InlineData(2, 20, false, 1200, -1, false, true)]
    [InlineData(2, 20, true, 1200, -1, false, false)]
    public void Initialization_Logic_Should_Be_Correctly_Calculated(
        int activeThreads,
        int associationsQueued,
        bool isRegistered,
        int totalAssociations,
        int maxNumPagesToScan,
        bool cancelScan,
        bool expected)
    {
        // Act
        var result = Initializer.HasFinishedInitializing(activeThreads, associationsQueued, isRegistered,
            totalAssociations, maxNumPagesToScan, cancelScan);

        // Assert
        result.Should().Be(expected, "Initialization logic check failed");
    }
}
```

Figure: Write a unit test for complex logic evaluation

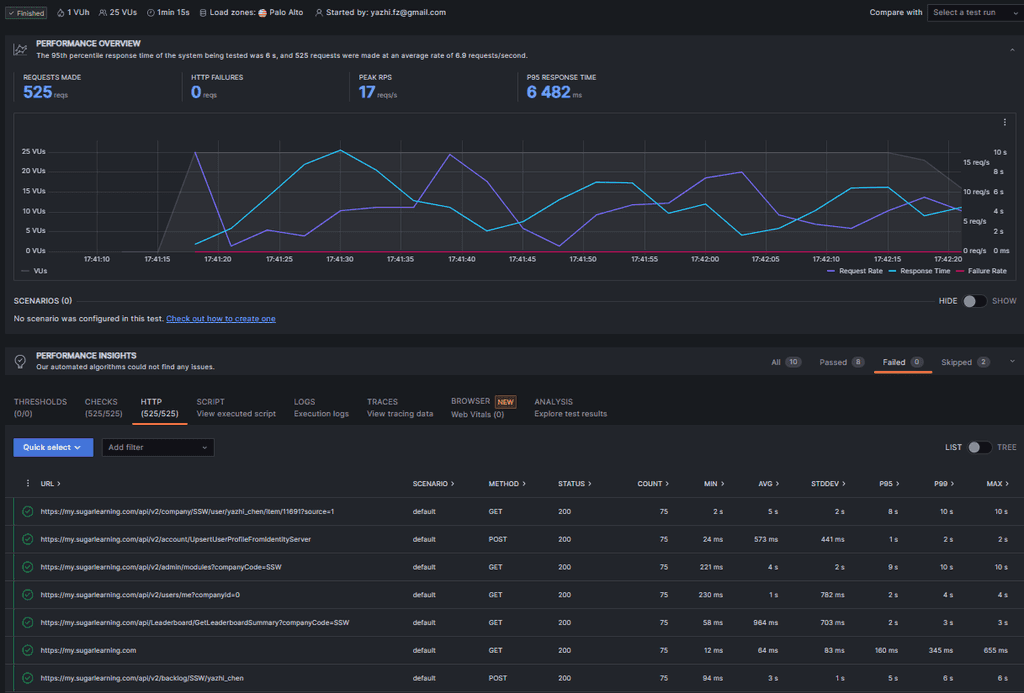

Typically, there are User Acceptance Tests that need to be written to measure the performance of your application. As a general rule of thumb, forms should load in less than 4 seconds. This can be automated with your load testing framework.

Sample Code

```javascript
import http from 'k6/http';

export const options = {
  thresholds: {
    http_req_duration: ['p(100)<4000'], // 100% of requests should be below 4000ms
  },
};

export default function () {
  http.get('https://test-api.k6.io/public/mainpage');
}
```

Figure: This code uses k6 to test that the MainPage loads in under 4 seconds

Sometimes, during performance load testing, it becomes necessary to simulate traffic originating from various regions to comprehensively assess system performance. This allows for a more realistic evaluation of how the application or system responds under diverse geographical conditions, reflecting the experiences of users worldwide.

Sample Code:

```javascript
import http from 'k6/http';
import { check, sleep } from 'k6';

export const options = {
  vus: 25, // simulates 25 virtual users
  duration: "60s", // sets the duration of the test
  ext: {
    // configuring Load Impact, a cloud-based load testing service
    loadimpact: {
      projectID: 3683064,
      name: "West US - 25 vus",
      distribution: {
        distributionLabel1: { loadZone: 'amazon:us:palo alto', percent: 34 },
        distributionLabel2: { loadZone: 'amazon:cn:hong kong', percent: 33 },
        distributionLabel3: { loadZone: 'amazon:au:sydney', percent: 33 },
      },
    },
  },
  summaryTrendStats: ['avg', 'min', 'max', 'p(95)', 'p(99)', 'p(99.99)'],
};

export default function () {
  const baseUrl = "https://my.sugarlearning.com";
  const httpGetPages = [
    baseUrl,
    baseUrl + "/api/Leaderboard/GetLeaderboardSummary?companyCode=SSW",
    baseUrl + "/api/v2/admin/modules?companyCode=SSW"
  ];
  const token = ''; // set the token here
  const params = {
    headers: {
      'Content-Type': 'application/json',
      Authorization: "Bearer " + token
    }
  };

  for (const page of httpGetPages) {
    const res = http.get(page, params);
    check(res, { 'status was 200': (r) => r.status === 200 });
    sleep(1);
  }
}
```

Figure: This code uses k6 to test several endpoints by simulating traffic from different regions

Some popular open source load testing tools are:

- Apache JMeter - 100% Java application with built in reporting - 6.7k Stars on GitHub

- k6 - Write load tests in javascript - 19.2k Stars on GitHub

- NBomber - Write tests in C# - 1.8k Stars on GitHub

- Bombardier - CLI tool for writing load tests - 3.9k stars on GitHub

- BenchmarkDotNet - A powerful benchmarking tool - 8.8k stars on GitHub

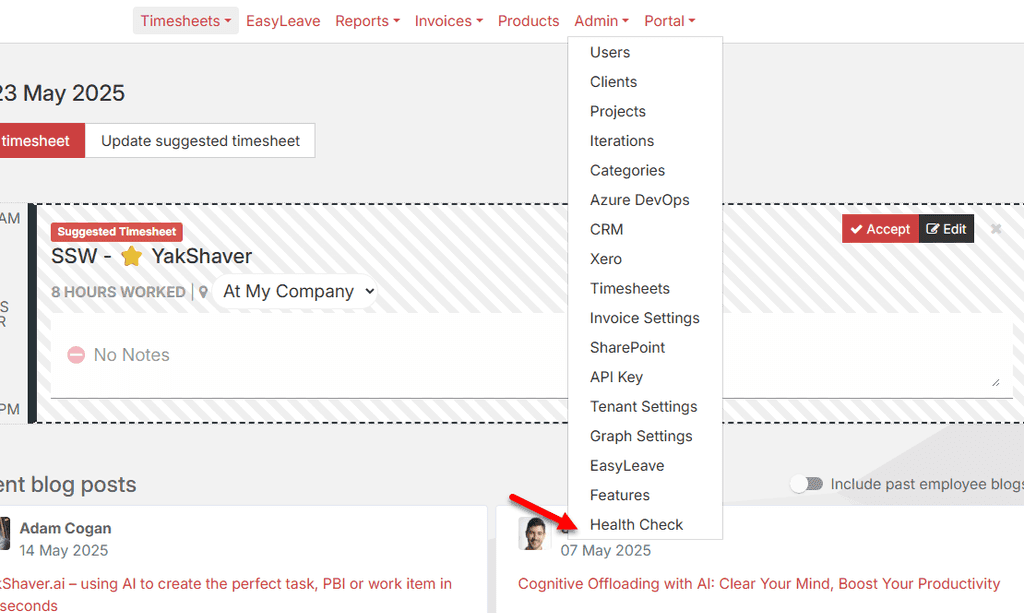

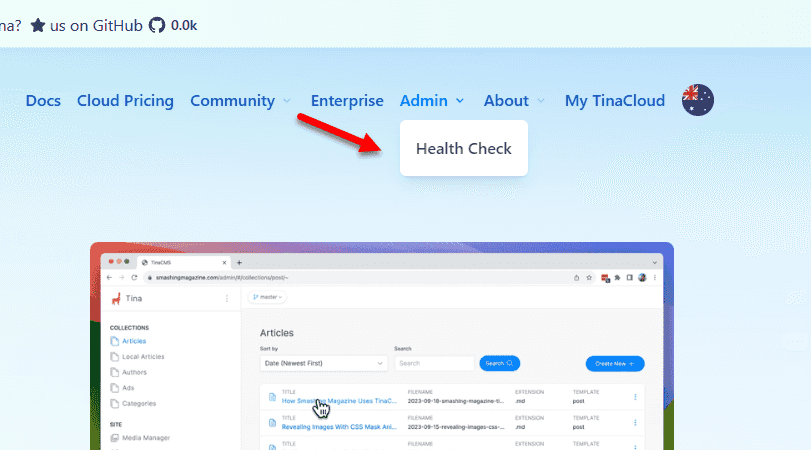

Most developers include health checks for their own applications, but modern solutions are often highly dependent on external cloud infrastructure. When critical services go down, your app could become unresponsive or fail entirely. Ensuring your infrastructure is healthy is just as important as your app.

Figure: Infrastructure Health Checks

Your app is only as healthy as its infrastructure

Enterprise applications typically leverage a large number of cloud services; databases, caches, message queues, and more recently LLMs and other cloud-only AI services. These pieces of infrastructure are crucial to the health of your own application, and as such should be given the same care and attention to monitoring as your own code. If any component of your infrastructure fails, your app may not function as expected, potentially leading to outages, performance issues, or degraded user experience.

Monitoring the health of infrastructure services is not just a technical task; it ensures the continuity of business operations and user satisfaction.

Figure: Health Check Infrastructure | Toby Churches | Rules (3 min)

Setting Up Health Checks for App & Infrastructure in .NET

To set up health checks in a .NET application, start by configuring the built-in health checks middleware in your Program.cs (or Startup.cs for older versions). Use AddHealthChecks() to monitor core application behavior, and extend it with specific checks for infrastructure services such as databases, Redis, or external APIs using packages like AspNetCore.HealthChecks.SqlServer or AspNetCore.HealthChecks.Redis. This approach ensures your health endpoint reflects the status of both your app and its critical dependencies.

👉 See detailed implementation steps in the video above, and refer to the official Microsoft documentation for further configuration examples and advanced usage
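As a rough sketch of the setup described above, assuming an ASP.NET Core minimal-hosting `Program.cs`: the `/health` path, the check names, the `ExternalApiHealthCheck` class and the probed URL are all hypothetical. It registers one application-level check and one custom infrastructure check using only the built-in health checks API. The infrastructure packages mentioned above add their own registration methods to the same builder.

```csharp
using System;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Diagnostics.HealthChecks;

var builder = WebApplication.CreateBuilder(args);

builder.Services
    .AddHealthChecks()
    // Application-level check: replace the lambda with a real self-test.
    .AddCheck("self", () => HealthCheckResult.Healthy("App is running"))
    // Infrastructure check: a hypothetical probe of an external dependency.
    .AddCheck<ExternalApiHealthCheck>("external-api");

var app = builder.Build();

// Exposes the aggregated status of all registered checks.
app.MapHealthChecks("/health");

app.Run();

// Hypothetical custom check, for illustration only.
public class ExternalApiHealthCheck : IHealthCheck
{
    public async Task<HealthCheckResult> CheckHealthAsync(
        HealthCheckContext context,
        CancellationToken cancellationToken = default)
    {
        try
        {
            // A short timeout keeps the health endpoint itself fast.
            // Real code would normally get its HttpClient from IHttpClientFactory.
            using var client = new HttpClient { Timeout = TimeSpan.FromSeconds(2) };
            using var response = await client.GetAsync("https://example.com/", cancellationToken);

            return response.IsSuccessStatusCode
                ? HealthCheckResult.Healthy()
                : HealthCheckResult.Unhealthy($"Status code {(int)response.StatusCode}");
        }
        catch (Exception ex)
        {
            return HealthCheckResult.Unhealthy("Dependency unreachable", ex);
        }
    }
}
```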

Alerts and responses

Adding comprehensive health checks is great, but if no-one is told about it - what's the point? There are awesome tools available to notify Site Reliability Engineers (SREs) or SysAdmins when something is offline, so make sure your app is set up to use them! For instance, Azure's Azure Monitor Alerts and AWS' CloudWatch provide a suite of configurable options for who, what, when, and how alerts should be fired.

Health check UIs

Depending on your needs, you may want to bake in a health check UI directly into your app. Packages like AspNetCore.HealthChecks.UI make this a breeze, and can often act as your canary in the coalmine. Cloud providers' native status/health pages can take a while to update, so having your own can be a huge timesaver.

Figure: Good example - AspNetCore.HealthChecks.UI gives you a healthcheck dashboard OOTB

Tips for Securing Your Health Check Endpoints

Keep health check endpoints internal by default to avoid exposing sensitive system data.

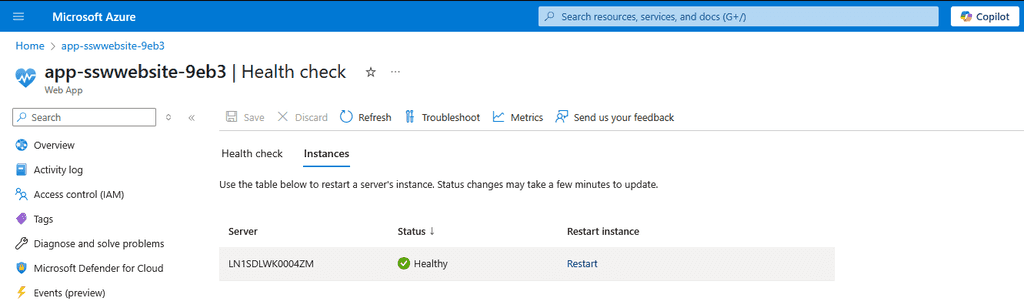

Health Checks in Azure

When deploying apps in Azure, it's good practice to enable health checks within the Azure portal. The Azure portal allows you to perform health checks on specific paths for your app service. Azure pings these paths at 1-minute intervals, ensuring the response code is between 200 and 299. If 10 consecutive responses with error codes accumulate, the app service will be deemed unhealthy and will be replaced with a new instance.

Private Health Check – ✅ Best Practices

- Require authentication (API key, bearer token, etc.)

- (Optional) Restrict access by IP range, VNET, or internal DNS

- Include detailed diagnostics (e.g., database, Redis, third-party services)

- Integrate with internal observability tools like Azure Monitor

- Keep health checks lightweight and fast. Avoid overly complex checks that could increase response times or strain system resources

- Use caching and timeout strategies. To avoid excessive load, health checks can time out gracefully and cache results to prevent redundant checks under high traffic. See the official Microsoft documentation for more details

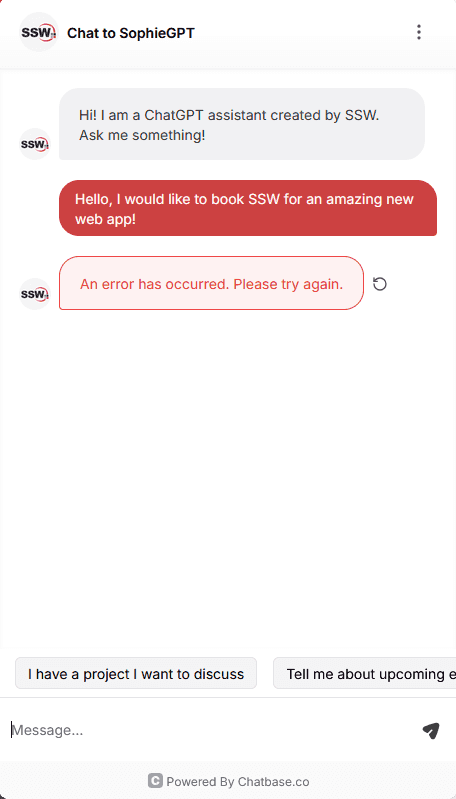

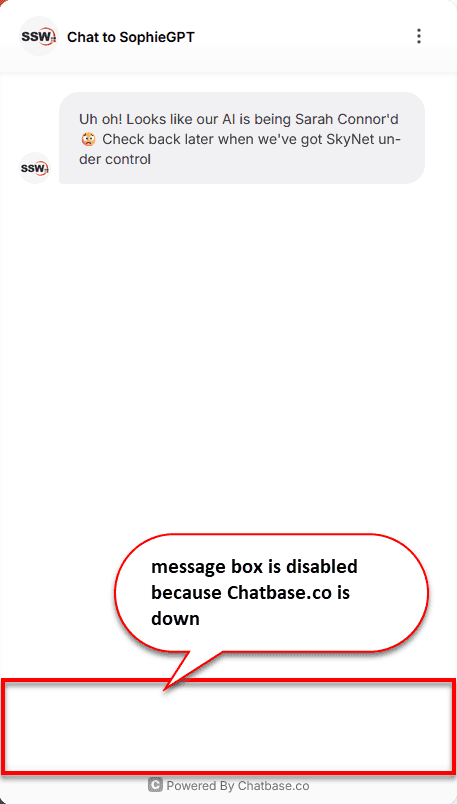

Handle offline infrastructure gracefully

| Category | Example services |
| --- | --- |
| Critical | Database, Redis cache, authentication service (e.g., Auth0, Azure AD) |
| Non-Critical | OpenAI API, email/SMS providers, analytics tools |

When using non-critical infrastructure like an LLM-powered chatbot, make sure to implement graceful degradation strategies. Instead of failing completely, this allows your app to respond intelligently to infrastructure outages, whether through fallback logic, informative user messages, or retry mechanisms when the service is back online.
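One possible shape for such a fallback is sketched below. The `ChatAssistant` class, its delegate parameter and the fallback message are hypothetical and stand in for whatever client your non-critical service uses. Because the dependency is passed in, the degraded path can be unit tested without the real service.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical wrapper around a non-critical dependency (e.g. an LLM-backed chatbot).
public class ChatAssistant
{
    private readonly Func<string, Task<string>> _askModel;

    public ChatAssistant(Func<string, Task<string>> askModel) => _askModel = askModel;

    public async Task<string> AskAsync(string question)
    {
        try
        {
            return await _askModel(question);
        }
        catch (Exception)
        {
            // The non-critical service is down: degrade instead of failing the whole request.
            // A real implementation would likely log the error and catch narrower exception types.
            return "The assistant is temporarily unavailable. Please try again later.";
        }
    }
}
```

A test can pass in a delegate that throws to simulate the outage and then assert that the informative message is returned. Critical services such as the database usually should not be masked this way, since the app cannot do useful work without them.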

If your method consists of logic and IO, we recommend you isolate them to increase the testability of the logic.

Take this for example (and see how we refactor it):

```csharp
public static List<string> GetFilesInProject(string projectFile)
{
    List<string> files = new List<string>();
    TextReader tr = File.OpenText(projectFile);
    Regex regex = RegexPool.DefaultInstance[RegularExpression.GetFilesInProject];
    MatchCollection matches = regex.Matches(tr.ReadToEnd());
    tr.Close();
    string folder = Path.GetDirectoryName(projectFile);
    foreach (Match match in matches)
    {
        string filePath = Path.Combine(folder, match.Groups["FileName"].Value);
        if (File.Exists(filePath))
        {
            files.Add(filePath);
        }
    }
    return files;
}
```

Figure: Bad example - The logic and the IO are coded in the same method

While this is a small, concise and fairly robust piece of code, it still isn't that easy to unit test. Writing a unit test for this would require us to create temporary files on the hard drive, and would probably end up requiring more code than the method itself.

If we start by refactoring it with an overload, we can remove the IO dependency and extract the logic further making it easier to test:

```csharp
public static List<string> GetFilesInProject(string projectFile)
{
    string projectFileContents;
    // The using block disposes (and closes) the reader
    using (TextReader reader = File.OpenText(projectFile))
    {
        projectFileContents = reader.ReadToEnd();
    }
    string baseFolder = Path.GetDirectoryName(projectFile);
    return GetFilesInProjectByContents(projectFileContents, baseFolder, true);
}

public static List<string> GetFilesInProjectByContents(string projectFileContents, string baseFolder, bool checkFileExists)
{
    List<string> files = new List<string>();
    Regex regex = RegexPool.DefaultInstance[RegularExpression.GetFilesInProject];
    MatchCollection matches = regex.Matches(projectFileContents);
    foreach (Match match in matches)
    {
        string filePath = Path.Combine(baseFolder, match.Groups["FileName"].Value);
        // Skip the file system entirely when checkFileExists is false
        if (!checkFileExists || File.Exists(filePath))
        {
            files.Add(filePath);
        }
    }
    return files;
}
```

Figure: Good example - The logic is now isolated from the IO

The first method (GetFilesInProject) is simple enough that it can remain untested. We do, however, want to test the second method (GetFilesInProjectByContents). Testing the second method is now easy:

```csharp
[Test]
public void TestVS2003CSProj()
{
    string projectFileContents = VSProjects.VS2003CSProj;
    string baseFolder = @"C:\NoSuchFolder";
    List<string> result = CommHelper.GetFilesInProjectByContents(projectFileContents, baseFolder, false);
    Assert.AreEqual(15, result.Count);
    Assert.AreEqual(true, result.Contains(Path.Combine(baseFolder, "BaseForm.cs")));
    Assert.AreEqual(true, result.Contains(Path.Combine(baseFolder, "AssemblyInfo.cs")));
}

[Test]
public void TestVS2005CSProj()
{
    string projectFileContents = VSProjects.VS2005CSProj;
    string baseFolder = @"C:\NoSuchFolder";
    List<string> result = CommHelper.GetFilesInProjectByContents(projectFileContents, baseFolder, false);
    Assert.AreEqual(6, result.Count);
    Assert.AreEqual(true, result.Contains(Path.Combine(baseFolder, "OptionsUI.cs")));
    Assert.AreEqual(true, result.Contains(Path.Combine(baseFolder, "VSAddInMain.cs")));
}
```

Good - Different test cases and assertions are created to test the logic
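Because `RegexPool` and the `VSProjects` test data are not shown here, the following is only a minimal, self-contained sketch of the same idea. The class name `ProjectFileParser`, the `Compile Include` pattern and the inline project fragment are assumptions made for illustration, not the actual implementation. It shows that once the parsing logic takes the file contents as a string, it can be exercised without creating any files on disk.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.RegularExpressions;

public static class ProjectFileParser
{
    // Hypothetical pattern standing in for the RegexPool expression used above.
    private static readonly Regex CompileInclude =
        new Regex("<Compile\\s+Include=\"(?<FileName>[^\"]+)\"");

    // Pure logic: takes the file contents as a string, so no IO is needed.
    public static List<string> GetFilesInProjectByContents(string projectFileContents, string baseFolder)
    {
        var files = new List<string>();
        foreach (Match match in CompileInclude.Matches(projectFileContents))
        {
            files.Add(Path.Combine(baseFolder, match.Groups["FileName"].Value));
        }
        return files;
    }
}

public static class Demo
{
    public static void Main()
    {
        // Inline fragment of a project file; nothing is written to disk.
        string contents =
            "<ItemGroup>\n" +
            "  <Compile Include=\"BaseForm.cs\" />\n" +
            "  <Compile Include=\"AssemblyInfo.cs\" />\n" +
            "</ItemGroup>";

        List<string> result = ProjectFileParser.GetFilesInProjectByContents(contents, "NoSuchFolder");

        Console.WriteLine(result.Count); // prints 2
        Console.WriteLine(result.Contains(Path.Combine("NoSuchFolder", "BaseForm.cs"))); // prints True
    }
}
```

Keeping the test input inline (or in a resource class, as `VSProjects` appears to do) means each test documents exactly which input produced which result.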

Some bugs have a whole history related to them and, when we fix them, we don't want to lose the rationale for the test. By adding a comment to the test that references the bug ID, future developers can see why a test is testing a particular behaviour.

```csharp
[Test]
public void TestProj11()
{
}
```

Figure: Bad example - The test name is the bug ID and it's unclear what it is meant to test

```csharp
/// <summary>
/// Test case where a user can cause an application exception on the Seminars webpage
/// 1. User enters a title for the seminar
/// 2. Saves the item
/// 3. Presses the back button
/// 4. Chooses to resave the item
///
/// See: https://server/jira/browse/PROJ-11
/// </summary>
[Test]
public void TestResavingAfterPressingBackShouldntBreak()
{
}
```

Figure: Good example - The test name is clearer, good comments for the unit test give a little context, and there is a link to the original bug report

The need to build rich web user interfaces is resulting in more and more JavaScript in our applications.

Because JavaScript does not have the safeguards of strong typing and compile-time checking, it is just as important to unit test your JavaScript as your server-side code.

You can write unit tests for JavaScript using a test runner such as Jest or Karma.

Jest is recommended since it runs faster than Karma: Karma runs tests in a browser, while Jest runs them in Node.

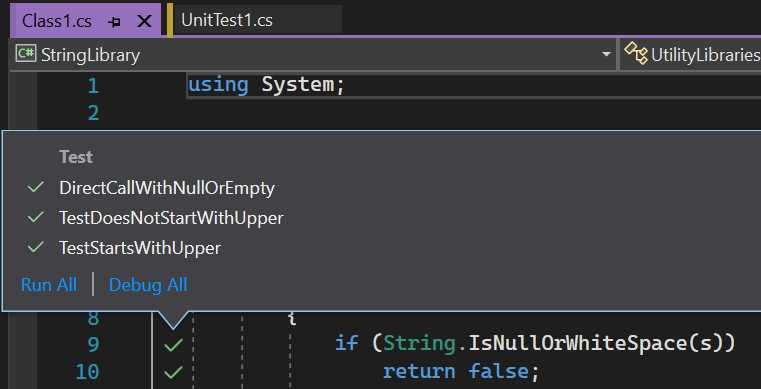

By enabling Live Unit Testing in a Visual Studio solution, you gain insight into the test coverage and the status of your tests.

Whenever you modify your code, Live Unit Testing dynamically executes your tests and immediately notifies you when your changes cause tests to fail, providing a fast feedback loop as you code.

Note: The Live Unit Testing feature requires Visual Studio Enterprise edition.

To enable Live Unit Testing in Visual Studio, select Test | Live Unit Testing | Start.

You can get more detailed information about test coverage and test results by selecting a particular code coverage icon in the code editor window:

Figure: This code is covered by 3 unit tests, all of which passed

Tip: If you find a method that isn't covered by any unit tests, consider writing a unit test for it. You can simply right-click on the method and choose Create Unit Tests to add a unit test in context.

For more details see Joe Morris’s video on .NET Tooling Improvements Overview – Live Unit Testing.

If you store your URL references in the application settings, you can create integration tests to validate them.

Figure: URL for link stored in application settings

Sample Code: How to test the URL

```csharp
[Test]
public void urlRulesToBetterInterfaces()
{
    HttpStatusCode result = WebAccessTester.GetWebPageStatusCode(Settings.Default.urlRulesToBetterInterfaces);
    Assert.IsTrue(result == HttpStatusCode.OK, result.ToString());
}
```

Sample Code: Method used to verify the page

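```csharp
using System;
using System.Net;

public class WebAccessTester
{
    public static HttpStatusCode GetWebPageStatusCode(string url)
    {
        HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);
        req.Proxy = new WebProxy();
        req.Proxy.Credentials = CredentialCache.DefaultCredentials;

        try
        {
            using (HttpWebResponse resp = (HttpWebResponse)req.GetResponse())
            {
                // A 200 served from a different path (for a URL that is not an explicit
                // redirect) means we landed on another page, so treat it as not found
                if (resp.StatusCode == HttpStatusCode.OK
                    && url.ToLower().IndexOf("redirect") == -1
                    && url.ToLower().IndexOf(resp.ResponseUri.AbsolutePath.ToLower()) == -1)
                {
                    return HttpStatusCode.NotFound;
                }

                return resp.StatusCode;
            }
        }
        catch (WebException ex)
        {
            // Log the whole exception chain
            for (Exception e = ex; e != null; e = e.InnerException)
            {
                Console.WriteLine(e.ToString());
                Console.WriteLine("INNER EXCEPTION");
            }

            // Error statuses (404, 500, ...) arrive as a WebException that carries the response
            using (HttpWebResponse errorResp = ex.Response as HttpWebResponse)
            {
                if (errorResp == null)
                {
                    throw; // No response at all (e.g. DNS failure), so let the test fail
                }

                return errorResp.StatusCode;
            }
        }
    }
}
```

We've all heard of writing unit tests for code and business logic, but what happens when that logic is inside SQL Server?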

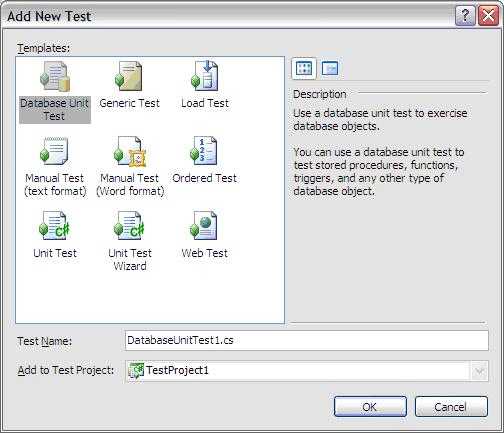

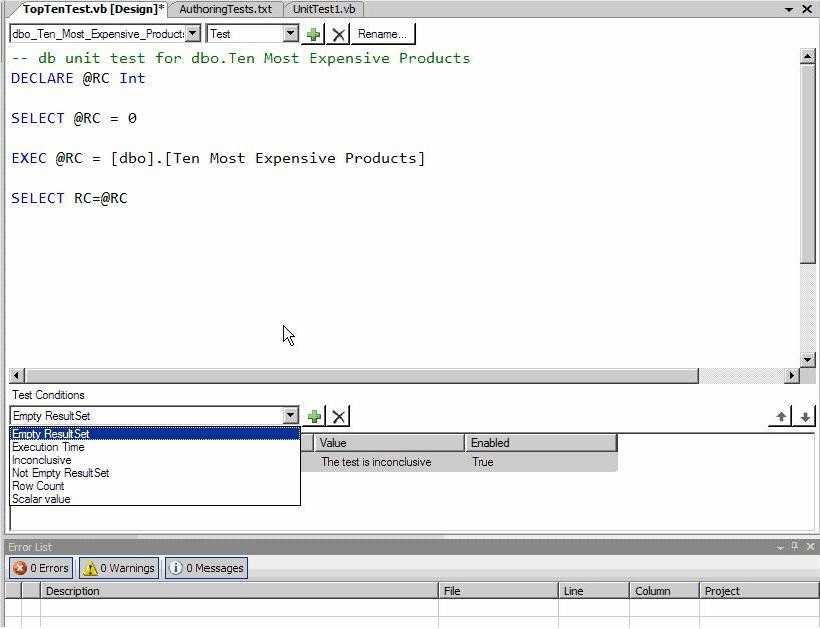

With Visual Studio, you can write database unit tests. These are useful for testing out:

- Stored Procedures

- Triggers

- User-defined functions

- Views

These tests can also be added to the same library as your unit, web and load tests.

Figure: Database Unit Test

Figure: Writing the unit test against a stored proc

If you want to know how to set up database unit tests locally and in your build pipeline, check out this article: Unit Test Stored Procedures and Automate Build, Deploy, Test Azure SQL Database Changes with CI/CD Pipelines

It is difficult to measure test quality as there are a number of different available metrics - for example, code coverage and number of assertions. Furthermore, when we write code to test, there are a number of questions that we must answer, such as, "is the code easily testable?" and "are we only testing the happy path or have we included the edge cases?"

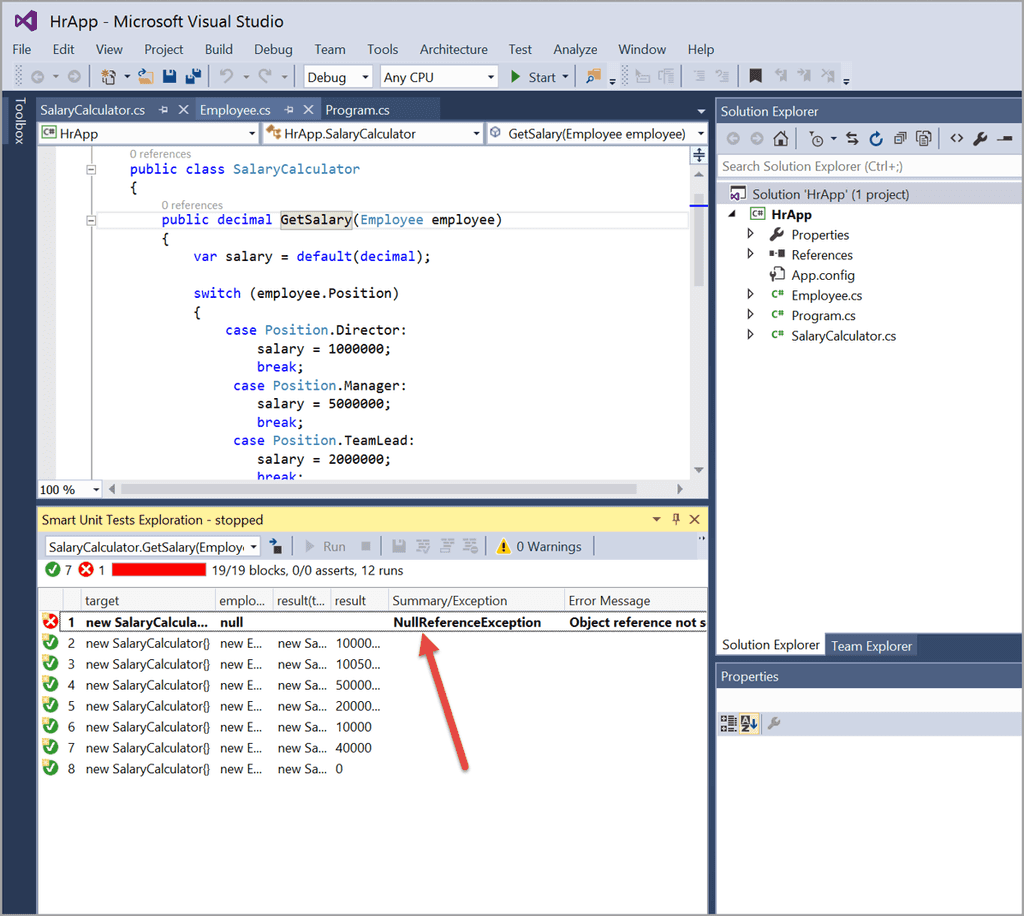

However, the most important question a dev can ask themselves is, "What assertions should I test?".

This is where IntelliTest comes into play. The feature, formerly known as Smart Unit Tests (and even more formerly known as Pex), will help you answer this question by intelligently analyzing your code. Then, based on the information gathered, it will generate a unit test for each scenario it finds.

In short, by using IntelliTest, you will increase code coverage, greatly increase the number of assertions tested, and increase the number of edge cases tested. By adding automation to your testing, you save yourself time in the long run and reduce the risk of problems in your code caused by simple human error.

Automated UI testing tools like Playwright and Selenium are great for testing the real experience of the users. Unfortunately, these tests can sometimes feel a bit too fragile as they are very sensitive to changes made to the UI.

Subcutaneous ("just beneath the skin") tests look to solve this pain point by doing integration testing just below the UI.

Martin Fowler was one of the first people to introduce the concept of subcutaneous tests into the mainstream, though it has failed to gather much momentum. Subcutaneous tests are great for solving problems where automated UI tests have difficulty interacting with the UI or struggle to manipulate the UI in the ways required for the tests we want to write.

Some of the key qualities of these tests are:

- They are written by developers (typically using the same framework as the unit tests)

- They can test the full underlying behaviour of your app, but bypass the UI

- They require business logic to be implemented in an API / middle layer and not in the UI

- They can be much easier to write than using technologies that drive a UI, e.g. Playwright or Selenium

The Introduction To Subcutaneous Testing by Melissa Eaden provides a good overview of this approach.

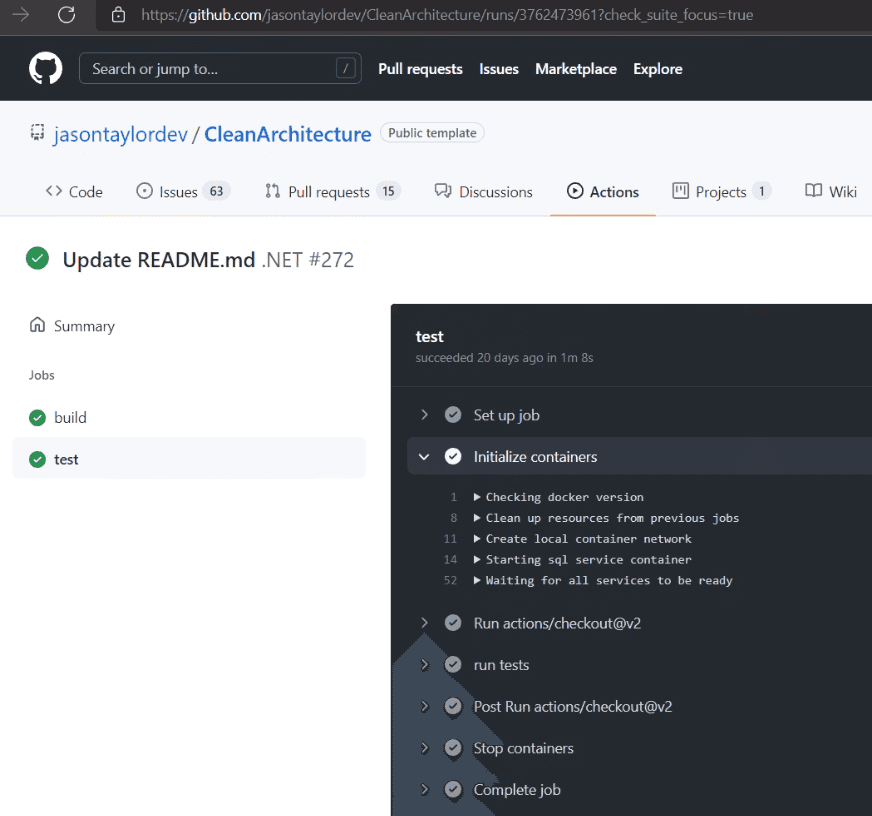

Integrate with DevOps

The gold standard ⭐ is to automatically run subcutaneous tests inside your DevOps processes such as when you perform a Pull Request or a build. You can do this using GitHub Actions or Azure DevOps.

Every test should reset the database so you always know your resources are in a consistent state.
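As a rough illustration of resetting state before every test, here is a minimal sketch that uses a hypothetical in-memory `FakeSeminarDatabase` instead of a real database, and a hand-rolled `RunTest` helper in place of a test framework's per-test setup hook. All names are assumptions made for the example. In a real suite the reset would run in your framework's setup method against the actual test database.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for a test database.
public sealed class FakeSeminarDatabase
{
    private readonly List<string> _seminars = new List<string>();

    public IReadOnlyList<string> Seminars => _seminars;

    public void Add(string title) => _seminars.Add(title);

    // Returns the store to its known baseline: exactly one seeded row.
    public void Reset()
    {
        _seminars.Clear();
        _seminars.Add("Seeded seminar");
    }
}

public static class ResetDemo
{
    private static readonly FakeSeminarDatabase Database = new FakeSeminarDatabase();

    // Plays the role of a per-test setup hook: reset first, then run the test body.
    private static void RunTest(string name, Action<FakeSeminarDatabase> test)
    {
        Database.Reset();
        test(Database);
        Console.WriteLine($"{name}: {Database.Seminars.Count} row(s) after test");
    }

    public static void Main()
    {
        // Prints "AddsOneSeminar: 2 row(s) after test"
        RunTest("AddsOneSeminar", db => db.Add("Clean Architecture"));

        // Prints "AddsTwoSeminars: 3 row(s) after test" because the first test's row was reset away
        RunTest("AddsTwoSeminars", db => { db.Add("Blazor"); db.Add("EF Core"); });
    }
}
```

Because each test starts from the same baseline, the tests can run in any order without one test's leftovers changing another test's result.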

Jason Taylor has a fantastic example of Subcutaneous testing in his Clean Architecture template.

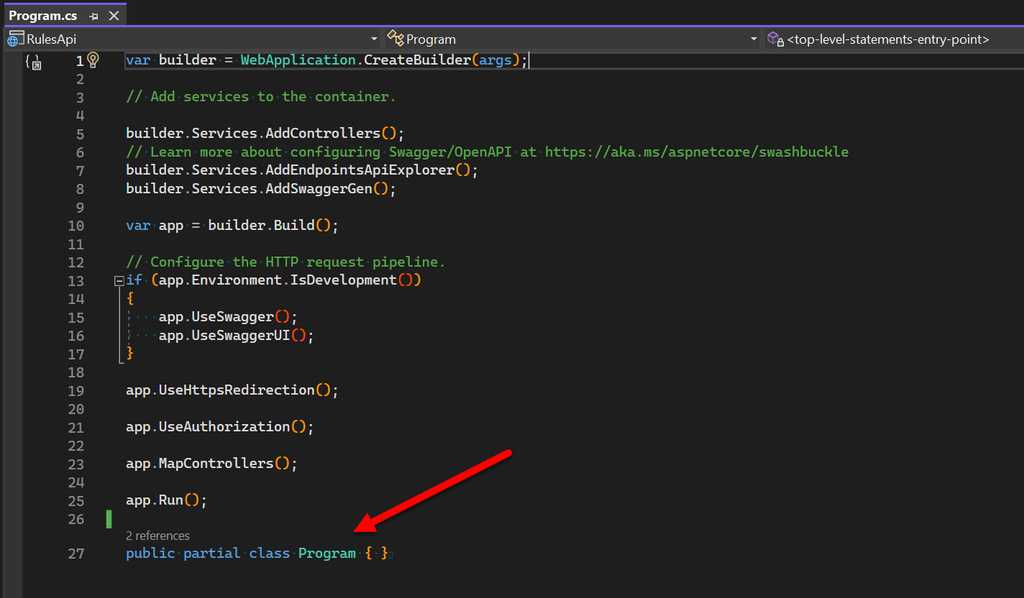

The

WebApplicationFactoryclass is used for bootstrapping an application in memory for functional end to end tests. As part of the initialization of the factory you need to reference a type from the application project.Typically in the past you'd want to use your

StartuporProgramclasses, the introduction of top-level statements changes how you'd reference those types, so we pivot for consistency.Top level statements allows for a cleaner

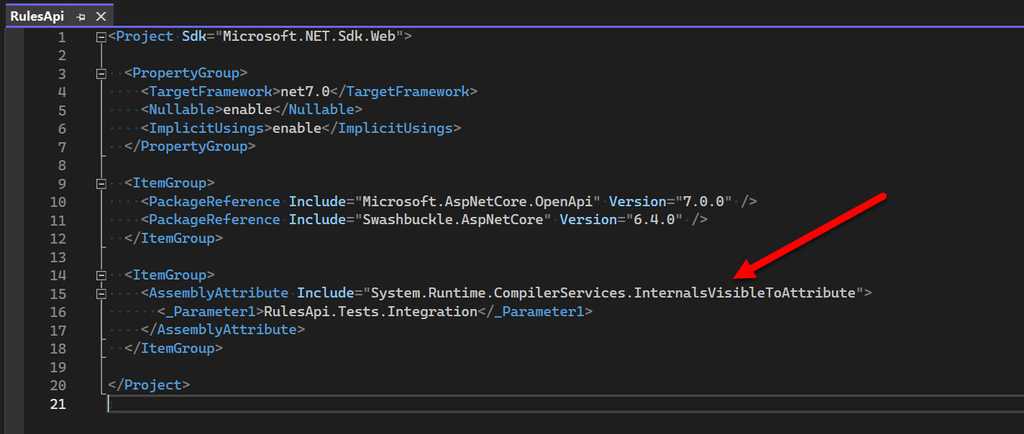

Programclass, but it also means you can't reference it directly without some additional changes.Option 1 - Using InternalsVisibleTo attribute

Adding the `InternalsVisibleTo` attribute to the csproj is a way that you'd be able to reference the `Program` class from your test project.

This small change leads to a long road of pain:

- Your `WebApplicationFactory` needs to be internal

- Which means you need to make your tests internal and

- In turn add an `InternalsVisibleTo` tag to your test project for the test runner to be able to access the tests.

Option 2 - Public partial Program class

A much quicker option to implement is to create a partial class of the `Program` class and make it public.

This approach means you don't need to do all the `InternalsVisibleTo` setup, but it does mean you are adding extra non-application code to your program file, which is what top-level statements are trying to avoid.
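A minimal sketch of Option 2, assuming C# 10 / .NET 6 or later, where the compiler-generated class for top-level statements is named `Program`. A plain console statement stands in for the web application startup code so that the example stays self-contained. In a real API project the top-level statements would build and run the web app instead.

```csharp
using System;

// Top-level statements: the compiler wraps this code in a generated Program class,
// which is internal unless another part of the class declares it public.
Console.WriteLine($"Program is public: {typeof(Program).IsPublic}"); // prints "Program is public: True"

// The extra non-application code: a public partial declaration that merges with the
// generated class, so a test project can reference the Program type directly.
public partial class Program { }
```

Removing the last line makes the generated class internal again, which is what forces the `InternalsVisibleTo` setup described in Option 1.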

Option 3 - Using an IApiMarker interface (recommended)

The `IApiMarker` interface is a simple interface that is used to reference the application project.

```csharp
namespace RulesApi;

// This marker interface is required for functional testing using WebApplicationFactory.
// See https://www.ssw.com.au/rules/use-iapimarker-with-webapplicationfactory/
public interface IApiMarker
{
}
```

Figure: Good example - Using an `IApiMarker` interface

Using the `IApiMarker` interface allows you to reference your application project in a consistent way; the approach is the same whether you use top-level statements or a standard `Program.Main` entry point.

- Your